Security Operations

1. Overview

1.1 What is Meghna Cloud?

Cloud computing now plays a pivotal role in technological advancement, reshaping how businesses and individuals operate. Among the many cloud service providers, Meghna Cloud distinguishes itself through innovation and dependability. Established through a collaboration between GenNext Technologies Limited and Bangladesh Data Center Company Limited (BDCCL), Meghna Cloud goes beyond conventional service paradigms: it embodies a commitment to excellence and a stride into the future.

1.2 Importance of Cloud Security

As a pioneer in cloud computing services, we are committed to ensuring the accessibility, privacy, and integrity of our clients' data. We defend our cloud infrastructure with extensive security procedures, including advanced intrusion prevention, penetration testing, vulnerability assessments, and the integration of threat intelligence, to maintain the highest standards of data protection and security for clients across various industries.

2. Security Operations Center

2.1 What is a Security Operations Center (SOC)?

A security operations center (SOC) – sometimes called an information security operations center, or ISOC – is an in-house or outsourced team of IT security professionals that monitors an organization’s entire IT infrastructure, 24/7, to detect cybersecurity events in real time and address them as quickly and effectively as possible.

An SOC also selects, operates, and maintains the organization’s cybersecurity technologies, and continually analyzes threat data to find ways to improve the organization's security posture.

The chief benefit of operating or outsourcing an SOC is that it unifies and coordinates an organization’s security tools, practices, and response to security incidents. This usually results in improved preventative measures and security policies, faster threat detection, and faster, more effective and more cost-effective response to security threats. An SOC can also improve customer confidence, and simplify and strengthen an organization's compliance with industry, national and global privacy regulations.

2.2 What does a Security Operations Center (SOC) do?

SOC activities and responsibilities fall into three general categories.

1. Preparation, Planning and Prevention

Asset inventory: An SOC needs to maintain an exhaustive inventory of everything that needs to be protected, inside or outside the data center (e.g. applications, databases, servers, cloud services, endpoints, etc.) and all the tools used to protect them (firewalls, antivirus/antimalware/anti-ransomware tools, monitoring software, etc.). Many SOCs will use an asset discovery solution for this task.

Routine maintenance and preparation: To maximize the effectiveness of security tools and measures in place, the SOC performs preventative maintenance such as applying software patches and upgrades, and continually updating firewalls, whitelists and blacklists, and security policies and procedures. The SOC may also create system back-ups – or assist in creating backup policy or procedures – to ensure business continuity in the event of a data breach, ransomware attack or other cybersecurity incident.

Incident response planning: The SOC is responsible for developing the organization's incident response plan, which defines activities, roles, and responsibilities in the event of a threat or incident, as well as the metrics by which the success of any incident response will be measured.
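As one illustration of such metrics, the sketch below computes mean time to detect (MTTD) and mean time to resolve (MTTR; some teams read MTTR as mean time to respond or recover instead) from incident timestamps. The `Incident` record and its field names are hypothetical, and an actual incident response plan may define different metrics and data sources.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import List


@dataclass
class Incident:
    """Hypothetical incident record holding the timestamps the metrics need."""
    occurred: datetime  # when malicious activity began (often estimated afterwards)
    detected: datetime  # when the SOC raised or confirmed the alert
    resolved: datetime  # when the incident was contained and remediated


def mean_time_to_detect(incidents: List[Incident]) -> timedelta:
    """MTTD: average delay between occurrence and detection (needs at least one incident)."""
    return timedelta(seconds=mean((i.detected - i.occurred).total_seconds() for i in incidents))


def mean_time_to_resolve(incidents: List[Incident]) -> timedelta:
    """MTTR: average delay between detection and resolution (needs at least one incident)."""
    return timedelta(seconds=mean((i.resolved - i.detected).total_seconds() for i in incidents))


if __name__ == "__main__":
    history = [
        Incident(datetime(2024, 1, 1, 8, 0), datetime(2024, 1, 1, 10, 0), datetime(2024, 1, 1, 14, 0)),
        Incident(datetime(2024, 1, 2, 9, 0), datetime(2024, 1, 2, 9, 30), datetime(2024, 1, 2, 11, 30)),
    ]
    print("MTTD:", mean_time_to_detect(history))   # 1:15:00
    print("MTTR:", mean_time_to_resolve(history))  # 3:00:00
```

Tracking these values per quarter, for example, shows whether changes to tooling or procedures are actually shortening detection and response.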

Regular testing: The SOC team performs vulnerability assessments, which are comprehensive assessments that identify each resource's vulnerability to potential threats and the associated costs. It also conducts penetration tests that simulate specific attacks on one or more systems. The team remediates or fine-tunes applications, security policies, best practices and incident response plans based on the results of these tests.

Staying current: The SOC stays up to date on the latest security solutions and technologies, and on the latest threat intelligence: news and information about cyberattacks and the hackers who perpetrate them, gathered from social media, industry sources, and the dark web.

2. Monitoring, Detection and Response

Continuous, around-the-clock security monitoring: The SOC monitors the entire extended IT infrastructure (applications, servers, system software, computing devices, cloud workloads, and the network) 24/7/365 for signs of known exploits and for any suspicious activity. For many SOCs, the core monitoring, detection and response technology has been security information and event management, or SIEM. SIEM monitors and aggregates alerts and telemetry from software and hardware on the network in real time, and then analyzes the data to identify potential threats. More recently, some SOCs have also adopted extended detection and response (XDR) technology, which provides more detailed telemetry and monitoring, and the ability to automate incident detection and response.

Log management: Log management – the collection and analysis of log data generated by every network event – is a subset of monitoring that's important enough to get its own paragraph. While most IT departments collect log data, it's the analysis that establishes normal or baseline activity, and reveals anomalies that indicate suspicious activity. In fact, many hackers count on the fact that companies don't always analyze log data, which can allow their viruses and malware to run undetected for weeks or even months on the victim's systems. Most SIEM solutions include log management capability.
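The idea of a baseline can be made concrete with a simple statistical check. The sketch below assumes hourly counts of one event type (for example, failed logins) have already been extracted from the logs, and flags periods that deviate from the baseline mean by more than a chosen number of standard deviations (a z-score test). The function name, sample data, and the threshold of 3 are illustrative; SIEM products apply their own, usually richer, detection logic.

```python
from statistics import mean, stdev
from typing import Dict, List


def find_anomalies(baseline: List[int], observed: Dict[str, int], threshold: float = 3.0) -> List[str]:
    """Return labels of observed periods whose event count lies more than
    `threshold` standard deviations from the baseline mean (z-score test)."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # sample standard deviation; needs at least two baseline values
    if sigma == 0:
        # A perfectly flat baseline: any deviation at all is unusual.
        return [label for label, count in observed.items() if count != mu]
    return [label for label, count in observed.items() if abs(count - mu) / sigma > threshold]


if __name__ == "__main__":
    # Failed logins per hour during a normal period (hypothetical data).
    normal_hours = [10, 12, 11, 9, 10, 12, 11, 10]
    today = {"02:00": 11, "03:00": 95}
    print(find_anomalies(normal_hours, today))  # ['03:00']
```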

Threat detection: The SOC team separates the signal from the noise (the indications of actual cyberthreats and hacker exploits from the false positives) and then triages the threats by severity. Modern SIEM solutions include artificial intelligence (AI) that automates these processes and 'learns' from the data to get better at spotting suspicious activity over time.

Incident response: In response to a threat or actual incident, the SOC moves to limit the damage. Actions can include:

- Root cause investigation, to determine the technical vulnerabilities that gave hackers access to the system, as well as other factors (such as bad password hygiene or poor enforcement of policies) that contributed to the incident.

- Shutting down compromised endpoints or disconnecting them from the network.

- Isolating compromised areas of the network or rerouting network traffic.

- Pausing or stopping compromised applications or processes.

- Deleting damaged or infected files.

- Running antivirus or anti-malware software.

- Decommissioning passwords for internal and external users.

Many XDR solutions enable SOCs to automate and accelerate these and other incident responses.

3. Recovery, Refinement and Compliance

Recovery and remediation: Once an incident is contained, the SOC eradicates the threat, then works to restore the impacted assets to their pre-incident state (e.g. wiping, restoring and reconnecting disks, end-user devices and other endpoints; restoring network traffic; restarting applications and processes). In the event of a data breach or ransomware attack, recovery may also involve cutting over to backup systems, and resetting passwords and authentication credentials.

Post-mortem and refinement: To prevent a recurrence, the SOC uses any new intelligence gained from the incident to better address vulnerabilities, update processes and policies, choose new cybersecurity tools or revise the incident response plan. At a higher level, the SOC team may also try to determine whether the incident reveals a new or changing cybersecurity trend for which the team needs to prepare.

Compliance management: It's the SOC's job to ensure all applications, systems, and security tools and processes comply with data privacy regulations such as GDPR (General Data Protection Regulation), CCPA (California Consumer Privacy Act), PCI DSS (Payment Card Industry Data Security Standard), and HIPAA (Health Insurance Portability and Accountability Act). Following an incident, the SOC makes sure that users, regulators, law enforcement and other parties are notified in accordance with regulations, and that the required incident data is retained for evidence and auditing.

3. Key Security Operations Center (SOC) Team Members

In general, the chief roles on an SOC team include:

The SOC manager, who runs the team, oversees all security operations, and reports to the organization's CISO (chief information security officer).

Security engineers, who build out and manage the organization's security architecture. Much of this work involves evaluating, testing, recommending, implementing and maintaining security tools and technologies. Security engineers also work with development or DevOps/DevSecOps teams to make sure the organization's security architecture is included in application development cycles.

Security analysts (also called security investigators or incident responders), who are essentially the first responders to cybersecurity threats or incidents. Analysts detect, investigate, and triage (prioritize) threats; then they identify the impacted hosts, endpoints and users, and take the appropriate actions to mitigate and contain the impact of the threat or incident. (In some organizations, investigators and incident responders are separate roles classified as Tier 1 and Tier 2 analysts, respectively.)

Threat hunters (also called expert security analysts) specialize in detecting and containing advanced threats – new threats or threat variants that manage to slip past automated defenses.

The SOC team may include other specialists, depending on the size of the organization or the industry in which it does business. Larger companies may include a Director of Incident Response, responsible for communicating and coordinating incident response. And some SOCs include forensic investigators, who specialize in retrieving data – clues – from devices damaged or compromised in a cybersecurity incident.

4. Our Methodology

During the customer onboarding process, we undertake a complimentary general vulnerability assessment as an initial step to mitigate any potential security threats before any client data is introduced into our cloud environment. It is our priority to ensure that our clients' information remains secure from the outset.

Furthermore, we offer comprehensive security assessment and testing services upon client request. These services are designed to meticulously identify any misconfigurations or other security vulnerabilities that the client may wish to uncover and subsequently address. Our aim is to provide clients with the tools and insights necessary to fortify their security posture, thereby safeguarding their data and assets effectively.

4.1 Initial Vulnerability Assessment

Meghna Cloud is committed to ensuring the utmost security and protection of client data and applications. In our complimentary initial vulnerability assessment for client onboarding, we provide a range of vital scans to thoroughly evaluate and fortify your network infrastructure. Our network-based scans cover both wired and wireless networks, identifying potential security threats and vulnerabilities, including unauthorized devices and insecure connections. Our host-based scans examine servers, workstations, and network hosts, offering insights into configuration settings and update histories.

Additionally, we conduct wireless scans to pinpoint rogue access points and verify the secure configuration of your network. Our application scans examine websites, uncovering known software vulnerabilities and potential security issues in network and web application configurations. Lastly, our database scans identify weaknesses in database configurations and provide actionable recommendations to bolster your defenses. These scans can be executed in several ways: external scans targeting internet-accessible infrastructure, internal scans covering systems reachable from within the network, authenticated scans that reveal vulnerabilities from the perspective of a verified user, and unauthenticated scans that simulate potential external threats. All are tailored to ensure comprehensive security coverage for your organization.
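As a minimal illustration of the simplest form of unauthenticated network scan, the sketch below checks which TCP ports on a host accept connections, using only Python's standard `socket` module. The function name and timeout are assumptions; real scanners such as OpenVAS go much further (service fingerprinting, vulnerability checks, authenticated tests). Scans like this should only be run against systems you are authorized to assess.

```python
import socket
from typing import List


def tcp_connect_scan(host: str, ports: List[int], timeout: float = 0.5) -> List[int]:
    """Return the ports on `host` that complete a full TCP handshake."""
    open_ports = []
    for port in ports:
        try:
            # create_connection performs the three-way handshake; success means "open".
            with socket.create_connection((host, port), timeout=timeout):
                open_ports.append(port)
        except OSError:
            # Refused, filtered (timed out), or unreachable: treat as not open.
            pass
    return open_ports


if __name__ == "__main__":
    print(tcp_connect_scan("127.0.0.1", [22, 80, 443]))
```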

4.2 Comprehensive Vulnerability Assessment

Our comprehensive vulnerability assessment services are designed for clients who require advanced security measures for regulatory compliance and/or compliance with industry-specific benchmarks and standards. The details of our assessment approach are provided below. For pricing information on any comprehensive vulnerability assessment service, please contact your Meghna Cloud point of contact or our sales team at tech@gennext.net.

4.2.1 VAPT (Vulnerability Assessment and Penetration Testing)

Testing is based on the network-layer assessment report and uses common attacks and existing exploits.

Vulnerability Assessment (for application layer):

A customized vulnerability assessment will be performed on the applications, including their servers.

Advanced Penetration Testing:

Custom testing exploits will be developed by our experts based on discovered vulnerabilities.

4.2.2 Server Hardening

IPTables Configuration:

Iptables is a user-space utility program that allows a system administrator to configure the IP packet filter rules of the Linux kernel firewall, implemented as different Netfilter modules. The filters are organized in different tables, which contain chains of rules for how to treat network traffic packets.

Different kernel modules and programs are currently used for different protocols: iptables applies to IPv4, ip6tables to IPv6, arptables to ARP, and ebtables to Ethernet frames.
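To make the table-and-chain model concrete, the sketch below renders a default-deny `filter` table in the text format read by `iptables-restore`: inbound traffic is dropped unless a rule accepts it, loopback and established connections are allowed, SSH is limited to given source networks, and a list of public TCP ports is opened. The function name, port list, and SSH source ranges are hypothetical; a production ruleset would also need to consider ICMP, IPv6 (ip6tables), and persistence across reboots.

```python
import ipaddress
from typing import List


def build_filter_table(public_tcp_ports: List[int], ssh_sources: List[str]) -> str:
    """Render a default-deny iptables filter table in iptables-restore format."""
    for port in public_tcp_ports:
        if not 1 <= port <= 65535:
            raise ValueError(f"invalid TCP port: {port}")
    # IPv4Network raises a ValueError subclass for malformed or IPv6 input.
    networks = [ipaddress.IPv4Network(src) for src in ssh_sources]
    lines = [
        "*filter",
        # Chain policies: drop anything not explicitly accepted on INPUT and FORWARD.
        ":INPUT DROP [0:0]",
        ":FORWARD DROP [0:0]",
        ":OUTPUT ACCEPT [0:0]",
        # Allow loopback traffic and replies to connections that are already established.
        "-A INPUT -i lo -j ACCEPT",
        "-A INPUT -m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT",
    ]
    # SSH only from trusted administrative networks.
    lines += [f"-A INPUT -p tcp -s {net} --dport 22 -j ACCEPT" for net in networks]
    # Publicly exposed services.
    lines += [f"-A INPUT -p tcp --dport {port} -j ACCEPT" for port in public_tcp_ports]
    lines.append("COMMIT")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Save the output to a file and load it with: iptables-restore < rules.v4
    print(build_filter_table([80, 443], ["10.0.0.0/24"]), end="")
```

Generating the ruleset as text keeps it reviewable and version-controlled before it is applied atomically with `iptables-restore`.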

File & LVM Encryption:

Install or enable encrypted LVM, which allows all partitions in the logical volume, including swap, to be encrypted. Ubuntu also implemented Encrypted Private Directories, using eCryptfs, as a secure location for users to store sensitive information; the server and alternate installers offered the option to set up an encrypted private directory for the first user.

Ubuntu later added support for encrypted home directories and filename encryption. Encrypted Home allowed users to encrypt all files in their home directory and was supported in the Alternate Installer, and in the Desktop Installer via the preseed option user-setup/encrypt-home=true. Official support for Encrypted Private and Encrypted Home directories was later dropped in an Ubuntu LTS release. It is still possible to configure an encrypted private or home directory after Ubuntu is installed, using the ecryptfs-setup-private utility provided by the ecryptfs-utils package.

SYSCTL Configuration with Syscall Filtering and Kexec Blocking:

Programs can filter out the availability of kernel syscalls by using the seccomp_filter interface. This is done in containers or sandboxes that want to further limit the exposure to kernel interfaces when potentially running untrusted software. It is now possible to disable kexec via sysctl. CONFIG_KEXEC is enabled in Ubuntu so end users are able to use kexec as desired and the new sysctl allows administrators to disable kexec_load. This is desired in environments where CONFIG_STRICT_DEVMEM and modules_disabled are set, for example. When Secure Boot is in use, kexec is restricted by default to only load appropriately signed and trusted kernels.
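A minimal way to verify such settings is to compare the live values under `/proc/sys` with a desired baseline, as sketched below. `kernel.kexec_load_disabled` is the sysctl that disables kexec loading (once set to 1 it cannot be reset until the next reboot); the other two keys and the baseline as a whole are illustrative assumptions, not Meghna Cloud's actual hardening profile. Values would normally be applied persistently through a file in `/etc/sysctl.d/` and loaded with `sysctl --system`. Seccomp filtering is configured per program and is not covered here.

```python
from pathlib import Path
from typing import Dict, Optional, Tuple

# Illustrative baseline (an assumption, not a definitive hardening profile).
DESIRED: Dict[str, str] = {
    "kernel.kexec_load_disabled": "1",          # block loading a new kernel via kexec
    "kernel.dmesg_restrict": "1",               # hide the kernel log from unprivileged users
    "net.ipv4.conf.all.accept_redirects": "0",  # ignore ICMP redirect messages
}


def sysctl_path(key: str, proc_root: Path = Path("/proc/sys")) -> Path:
    """Translate a dotted sysctl key into its file under /proc/sys."""
    return proc_root.joinpath(*key.split("."))


def audit(desired: Dict[str, str],
          proc_root: Path = Path("/proc/sys")) -> Dict[str, Tuple[Optional[str], str]]:
    """Return {key: (actual, expected)} for every setting that is missing or differs."""
    drift = {}
    for key, expected in desired.items():
        path = sysctl_path(key, proc_root)
        actual = path.read_text().strip() if path.exists() else None
        if actual != expected:
            drift[key] = (actual, expected)
    return drift


if __name__ == "__main__":
    for key, (actual, expected) in audit(DESIRED).items():
        print(f"{key}: found {actual!r}, expected {expected!r}")
```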

USBGuard:

The usbguard package is available in the Ubuntu universe repository and provides a tool that uses the Linux kernel's USB authorization support to control which device IDs and device classes will be recognized.

Usbauth:

The usbauth package is also available in universe and likewise provides a tool that uses the Linux kernel's USB authorization support to control which device IDs and device classes will be recognized.

Additionally, Meghna Cloud will ensure the following:

- AppArmor configuration with all security packages

- Server patch updates after the assessment

- Device restriction

- Access log monitoring

- TPM enablement
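As an illustration of the device-ID control described above, the sketch below renders a minimal USBGuard policy that allows only listed `vendor:product` IDs, using the rule language's `allow id` form. It assumes the daemon's implicit policy blocks any device that no rule matches; the function name and sample IDs are hypothetical. In practice, `usbguard generate-policy` can produce a starting policy from the devices currently attached, including device hashes that are harder to spoof than IDs alone.

```python
import re
from typing import List

# USB IDs are four hex digits each: vendor:product (e.g. "1d6b:0002").
_USB_ID = re.compile(r"[0-9a-f]{4}:[0-9a-f]{4}")


def usbguard_allow_rules(allowed_ids: List[str]) -> str:
    """Render one 'allow id VVVV:PPPP' rule per approved device, sorted and de-duplicated."""
    rules = []
    for dev_id in sorted({i.strip().lower() for i in allowed_ids}):
        if not _USB_ID.fullmatch(dev_id):
            raise ValueError(f"not a vendor:product USB ID: {dev_id!r}")
        rules.append(f"allow id {dev_id}")
    return "".join(rule + "\n" for rule in rules)


if __name__ == "__main__":
    # Hypothetical approved device IDs.
    print(usbguard_allow_rules(["046D:C52B", "1d6b:0002"]), end="")
```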

4.2.3 Security Information and Event Management (SIEM)

Wazuh aggregates, stores, and analyzes security event data to identify anomalies or indicators of compromise. The SIEM platform adds contextual information to alerts to expedite investigations and reduce average response time. It scans systems against the Center for Internet Security (CIS) benchmarks to identify and remediate vulnerabilities, misconfigurations, or deviations from best practices and security standards. It also helps track and demonstrate compliance with regulatory frameworks such as PCI DSS, NIST 800-53, GDPR, TSC SOC2, and HIPAA.

Wazuh prioritizes identified vulnerabilities to speed up decision-making and remediation. Its vulnerability detection capability helps meet regulatory compliance requirements while reducing the attack surface. Wazuh correlates events from multiple sources, integrates threat intelligence feeds, and provides customizable dashboards and reports, and its alerts can be tailored to specific requirements. This allows security teams to respond quickly to threats and minimize the impact of security incidents. Wazuh generates comprehensive, actionable information tailored to each organization's needs, including reports that demonstrate compliance with various regulations and standards.
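Correlation of this kind is often threshold-based. Wazuh rules, for example, can fire when a related event repeats a given number of times within a time window (the `frequency` and `timeframe` rule options). The Python sketch below is not Wazuh code; it only illustrates the same sliding-window idea for repeated failed logins from one source address, with a hypothetical `Event` type and thresholds chosen for the example.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Deque, Dict, Iterable, Iterator, Tuple


@dataclass(frozen=True)
class Event:
    timestamp: float  # seconds since the epoch
    source_ip: str
    outcome: str      # "failure" or "success"


def brute_force_alerts(events: Iterable[Event], frequency: int = 8,
                       timeframe: float = 120.0) -> Iterator[Tuple[str, float]]:
    """Yield (source_ip, timestamp) whenever one source accumulates `frequency`
    failed logins within `timeframe` seconds."""
    windows: Dict[str, Deque[float]] = defaultdict(deque)
    for event in sorted(events, key=lambda e: e.timestamp):
        if event.outcome != "failure":
            continue
        window = windows[event.source_ip]
        window.append(event.timestamp)
        # Drop failures that have fallen out of the sliding window.
        while event.timestamp - window[0] > timeframe:
            window.popleft()
        if len(window) >= frequency:
            yield event.source_ip, event.timestamp
            window.clear()  # start over so one burst raises one alert
```

Keeping a separate window per source address means a slow, distributed attack would not trip this rule; that case needs a different correlation key.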

4.2.4 Extended Detection and Response (XDR)

Wazuh offers several advantages as an open-source XDR platform. It is customizable and can be modified to meet specific needs, giving greater flexibility and control over the environment. It has a large community of users and developers who provide support and expertise. Furthermore, it integrates with a broad range of security solutions, allowing organizations to build a comprehensive security ecosystem.

The Wazuh behavioral analysis capabilities involve using advanced analytics to identify deviations from normal behavior, which may indicate potential security threats. These capabilities include monitoring file integrity, network traffic, user behavior, and anomalies in system performance metrics.
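File integrity monitoring rests on a simple idea: record a cryptographic hash of each monitored file, then compare later snapshots against that baseline. The sketch below shows this with SHA-256 from Python's standard library. It is a simplified illustration, not how the Wazuh agent is implemented (the agent also records file attributes and supports real-time monitoring); the function names are assumptions. Typical use is to take a baseline with `snapshot()`, store it, and periodically call `compare()`.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def snapshot(root: Path) -> Dict[str, str]:
    """Map every file under `root` (by relative path) to its SHA-256 digest."""
    digests = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            # read_bytes() loads the whole file; large files should be hashed in chunks.
            digests[path.relative_to(root).as_posix()] = hashlib.sha256(path.read_bytes()).hexdigest()
    return digests


def compare(baseline: Dict[str, str], current: Dict[str, str]) -> Dict[str, List[str]]:
    """Classify differences between two snapshots."""
    return {
        "added": sorted(current.keys() - baseline.keys()),
        "removed": sorted(baseline.keys() - current.keys()),
        "modified": sorted(k for k in baseline.keys() & current.keys() if baseline[k] != current[k]),
    }
```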

Wazuh maps detected events to the relevant adversary tactics and techniques. It also ingests third-party threat intelligence data and lets analysts create custom queries to filter events and aid threat hunting. It can respond to threats automatically to limit their impact on infrastructure, using built-in response actions or custom actions defined according to the incident response plan. It also has built-in integrations with cloud services to collect and analyze telemetry.

It protects cloud-native and hybrid environments, including container infrastructure, by detecting and responding to current and emerging threats. It integrates with threat intelligence sources, including open-source intelligence (OSINT), commercial feeds, and user-contributed data, to provide up-to-date information on potential threats.

The Wazuh agent runs on the most common operating systems to detect malware, perform file integrity monitoring, read endpoint telemetry, perform vulnerability assessment, scan system configuration, and automatically respond to threats. Wazuh also ingests telemetry via syslog or APIs from third-party applications, devices, and workloads such as cloud providers and SaaS vendors.

4.2.5 Auto VA Platform

OpenVAS is a full-featured vulnerability management solution designed to serve agencies and enterprises. It provides end-to-end capabilities for end users, offering prioritization, asset tagging, web scanning, asset discovery, and risk management in one place.

Within the Auto VA platform, Wazuh complements OpenVAS by prioritizing identified vulnerabilities to speed up decision-making and remediation. Its vulnerability detection capability helps meet regulatory compliance requirements while reducing the attack surface.
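Prioritization usually starts from severity scores. The sketch below sorts hypothetical scan findings by CVSS base score and labels them with the CVSS v3.x qualitative rating scale (None 0.0, Low 0.1 to 3.9, Medium 4.0 to 6.9, High 7.0 to 8.9, Critical 9.0 to 10.0). The `Finding` type is an assumption rather than the OpenVAS or Wazuh report format, and real prioritization may also weigh asset criticality and exposure.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Finding:
    host: str
    title: str
    cvss: float  # CVSS v3.x base score, 0.0 to 10.0


def severity(score: float) -> str:
    """Map a CVSS v3.x base score to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS score out of range: {score}")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


def prioritize(findings: List[Finding]) -> List[Finding]:
    """Highest score first; ties broken by host name for a stable report order."""
    return sorted(findings, key=lambda f: (-f.cvss, f.host))


if __name__ == "__main__":
    scan = [  # hypothetical findings
        Finding("web-01", "Weak TLS configuration", 5.3),
        Finding("db-01", "Remote code execution in database service", 9.8),
        Finding("app-01", "Directory listing enabled", 5.3),
    ]
    for f in prioritize(scan):
        print(f"{severity(f.cvss):8} {f.cvss:4.1f} {f.host:7} {f.title}")
```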

4.2.6 Endpoint Protection

ClamAV is optimized to scan files quickly and can detect millions of viruses, worms, ransomware, trojans, mobile malware, and even Microsoft Office macro viruses. It can also scan archives and compressed files. Real-time (on-access) protection is available only on Linux systems, and ClamAV's on-access scanning (through ClamD) offers an option to block access to a file until it has been scanned for malware. Signature databases are digitally signed so that ClamAV uses only trusted databases. Any suspicious files that are detected are automatically sent to a cloud sandbox for further analysis so that they cannot perform malicious activity.
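Signature matching in its simplest form compares a file's hash with a database of known-bad hashes. ClamAV's hash-based signature files (`.hdb`) use lines of the form `MD5:FileSize:MalwareName`; the sketch below parses that format and checks in-memory content against it. It illustrates only this one signature type (ClamAV also uses body-based and logical signatures, among others) and is not a substitute for running `clamscan` or `clamdscan`; the function names and the signature name in the test are made up.

```python
import hashlib
from typing import Dict, Optional, Tuple

Signatures = Dict[Tuple[str, int], str]


def parse_hdb(text: str) -> Signatures:
    """Parse 'MD5:FileSize:MalwareName' lines into a {(md5, size): name} lookup.
    Wildcard sizes and other signature formats are not handled in this sketch."""
    signatures: Signatures = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        digest, size, name = line.split(":", 2)
        signatures[(digest.lower(), int(size))] = name
    return signatures


def match(data: bytes, signatures: Signatures) -> Optional[str]:
    """Return the malware name if the content's MD5 digest and size match a signature."""
    return signatures.get((hashlib.md5(data).hexdigest(), len(data)))
```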

4.2.7 Data Loss Prevention

OpenDLP is a free and open source, agent- and agentless-based, centrally managed, massively distributable data loss prevention tool released under the GPL. Given appropriate Windows, UNIX, MySQL, or MSSQL credentials, OpenDLP can simultaneously identify sensitive data at rest on hundreds or thousands of Microsoft Windows systems, UNIX systems, MySQL databases, or MSSQL databases from a centralized web application.

OpenDLP has two components:

- A web application to manage Windows agents and Windows/UNIX/database agentless scanners

- A Microsoft Windows agent used to perform accelerated scans of up to thousands of systems simultaneously

The web application automatically deploys and starts the agents over NetBIOS/SMB. When a scan is done, it automatically stops, uninstalls, and deletes the agents over NetBIOS/SMB. The web application also lets users:

- Pause, resume, and forcefully uninstall agents in an entire scan or on individual systems

- Concurrently and securely receive results from hundreds or thousands of deployed agents over a two-way-trusted SSL connection

- Write Perl-compatible regular expressions (PCREs) for finding sensitive data at rest

- Create reusable profiles for scans that include whitelisting or blacklisting directories and file extensions

- Review findings and identify false positives

- Export results as XML

- Deploy Windows agents through existing Meterpreter sessions (new in 0.5)

The web application is written in Perl with a MySQL backend.

The agent has the following characteristics:

- Runs on Windows 2000 and later systems

- Is written in C with no .NET Framework requirements

- Runs as a Windows Service at low priority so users do not see or feel it

- Resumes automatically upon system reboot with no user interaction

- Securely transmits results to the web application at user-defined intervals over a two-way-trusted SSL connection

- Uses PCREs to identify sensitive data inside files

- Performs additional checks on potential credit card numbers to reduce false positives

- Reads inside ZIP files, including Office 2007 and OpenOffice files

- Limits itself to a percentage of physical memory so there is no thrashing when processing large files
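The additional check on credit card numbers is typically the Luhn checksum that valid card numbers satisfy. The sketch below pairs a regular expression (Python's `re`, which is close to but not identical to PCRE) that finds runs of 13 to 19 digits, a common range for card numbers, optionally separated by spaces or hyphens, with a Luhn test that discards most random digit strings. It illustrates the technique only and is not OpenDLP's actual implementation; the pattern and function names are assumptions.

```python
import re
from typing import List

# 13 to 19 digits, each optionally followed by a single space or hyphen separator.
CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum: double every second digit from the right, sum the digits, check mod 10."""
    total = 0
    for position, char in enumerate(reversed(digits)):
        value = int(char)
        if position % 2 == 1:
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0


def find_card_numbers(text: str) -> List[str]:
    """Return digit strings that look like card numbers and pass the Luhn check."""
    hits = []
    for match in CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if luhn_valid(digits):
            hits.append(digits)
    return hits
```

In the test below, the 13-digit order number matches the pattern but fails the checksum, which is exactly the kind of false positive the extra check removes.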

Agentless Database Scans:

In addition to performing data discovery on Windows operating systems, OpenDLP also supports agentless data discovery against the following databases:

- Microsoft SQL Server

- MySQL

Agentless File System and File Share Scans:

With OpenDLP 0.4, one can perform the following scans:

- Agentless Windows file system scan (over SMB)

- Agentless Windows share scan (over SMB)

- Agentless UNIX file system scan (over SSH using sshfs)

4.2.8 Identity and Access Management

Single Sign-On:

Users authenticate with Keycloak rather than with individual applications. This means that your applications do not have to deal with login forms, authenticating users, or storing users. Once logged in to Keycloak, users do not have to log in again to access a different application. The same applies to logout: Keycloak provides single sign-out, which means users only have to log out once to be logged out of all applications that use Keycloak.
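Under the hood, an application that delegates login to Keycloak typically redirects the browser to Keycloak's OpenID Connect authorization endpoint. The sketch below builds such a redirect URL for the authorization code flow with PKCE (RFC 7636). The server URL, realm, client ID, and redirect URI are hypothetical, and the `/realms/{realm}/protocol/openid-connect/auth` path assumes a recent Keycloak distribution (older releases prefixed it with `/auth`). The returned `state` and code verifier must be kept in the user's session: `state` is checked on the callback, and the verifier is sent along with the authorization code to the token endpoint.

```python
import base64
import hashlib
import secrets
from typing import Tuple
from urllib.parse import urlencode


def authorization_url(base_url: str, realm: str, client_id: str,
                      redirect_uri: str) -> Tuple[str, str, str]:
    """Return (url, state, code_verifier) for an OIDC authorization code request with PKCE."""
    code_verifier = secrets.token_urlsafe(64)  # 86 URL-safe characters; RFC 7636 allows 43 to 128
    code_challenge = (
        base64.urlsafe_b64encode(hashlib.sha256(code_verifier.encode("ascii")).digest())
        .rstrip(b"=")
        .decode("ascii")
    )
    state = secrets.token_urlsafe(16)  # ties the callback to this login attempt (CSRF protection)
    params = {
        "client_id": client_id,
        "response_type": "code",
        "scope": "openid",
        "redirect_uri": redirect_uri,
        "state": state,
        "code_challenge": code_challenge,
        "code_challenge_method": "S256",
    }
    endpoint = f"{base_url.rstrip('/')}/realms/{realm}/protocol/openid-connect/auth"
    return f"{endpoint}?{urlencode(params)}", state, code_verifier


if __name__ == "__main__":
    url, state, verifier = authorization_url(
        "https://sso.example.com", "demo-realm", "customer-portal", "https://app.example.com/callback"
    )
    print(url)
```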

Identity Brokering and Social Login:

Enabling login with social networks is easy to add through the admin console: it is just a matter of selecting the social network you want to add. No code or changes to your application are required. Keycloak can also authenticate users with existing OpenID Connect or SAML 2.0 Identity Providers. Again, this is just a matter of configuring the Identity Provider through the admin console.

User Federation:

Keycloak has built-in support to connect to existing LDAP or Active Directory servers. You can also implement your own provider if you have users in other stores, such as a relational database.

Admin Console:

Through the admin console administrators can centrally manage all aspects of the Keycloak server. They can enable and disable various features. They can configure identity brokering and user federation. They can create and manage applications and services and define fine-grained authorization policies. They can also manage users, including permissions and sessions.

Account Management Console:

Through the account management console, users can manage their own accounts. They can update their profile, change passwords, and set up two-factor authentication. Users can also manage sessions and view the account history. If you have enabled social login or identity brokering, users can also link their accounts with additional providers, allowing them to authenticate to the same account with different identity providers.

Standard Protocols:

Keycloak is based on standard protocols and provides support for OpenID Connect, OAuth 2.0, and SAML.

Authorization Services:

If role-based authorization doesn't cover your needs, Keycloak provides fine-grained authorization services as well. This allows you to manage permissions for all your services from the Keycloak admin console and gives you the power to define exactly the policies you need.

4.2.9 Intrusion Prevention Systems

Snort is the foremost open-source Intrusion Prevention System (IPS). Snort IPS uses a series of rules that define malicious network activity, finds packets that match those rules, and generates alerts for users. Snort can also be deployed inline to stop these packets. Snort has three primary uses: as a packet sniffer like tcpdump, as a packet logger (useful for network traffic debugging), or as a full-blown network intrusion prevention system.
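Snort's rule-driven model can be illustrated with a toy matcher. Real Snort rules use their own language, for example `alert tcp any any -> $HOME_NET 80 (msg:"Example"; content:"/etc/passwd"; sid:1000001; rev:1;)`, and Snort's detection engine is far more capable. The Python sketch below only mimics the core idea with hypothetical `Rule` and `Packet` types: match protocol, destination port, and a byte pattern; raise an alert; and, for `drop` rules when running inline, block the packet.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Rule:
    action: str     # "alert" (detect only) or "drop" (block when running inline)
    protocol: str   # e.g. "tcp" or "udp"
    dst_port: int
    content: bytes  # byte pattern to look for in the payload
    msg: str
    sid: int        # rule identifier, as in Snort's sid option


@dataclass(frozen=True)
class Packet:
    protocol: str
    dst_port: int
    payload: bytes


def evaluate(packet: Packet, rules: List[Rule]) -> Tuple[bool, List[str]]:
    """Return (forward, alerts): whether the packet may pass, and the matching rule messages."""
    forward = True
    alerts = []
    for rule in rules:
        if (rule.protocol == packet.protocol
                and rule.dst_port == packet.dst_port
                and rule.content in packet.payload):
            alerts.append(f"[{rule.sid}] {rule.msg}")
            if rule.action == "drop":
                forward = False
    return forward, alerts
```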

4.2.10 VPN

OpenVPN works in any configuration, including remote access, site-to-site VPNs, Wi-Fi security, and enterprise-scale access solutions. It offers features such as load balancing, failover, and access controls, and it can tunnel IP subnetworks or virtual Ethernet adapters. OpenVPN's benefits include support for perfect forward secrecy, firewall compatibility, and strong security (256-bit encryption keys).

4.2.11 Security Analyst Consultation

Meghna Cloud has a dynamic security team with a wide array of expertise and experience, both international and domestic. Our security analysts provide on-site and off-site service 24/7/365 and are fully committed to securing client assets and fulfilling client requirements.

4.3 Security Framework

To protect client data and guarantee the integrity of our cloud services, we are dedicated to upholding the strictest security requirements and putting a strong framework in place. Using cutting-edge technologies and techniques, our security architecture is designed to handle a wide variety of potential attacks.

4.3.1 Planning & Security Tools Preparation

| Strategy | Preparations |
|---|---|
| Assessment and testing planning | Planning strategically for thorough security evaluations |
| Passive information gathering | Collecting non-intrusive data for analysis |
| Reporting template | Developing templates for standardized reporting |
| Initial hardening | Putting preliminary security measures in place |
| Security tool preparation | Ensuring tools are ready for use in security protocols |

Table 4.1: Planning & Security Tools Preparation

4.3.2 Vulnerability Assessment

| Layer | Tools | Descriptions |
|---|---|---|
| Network | OpenVAS | Assesses vulnerabilities in networking devices, servers, operating systems, and other server applications. |
| Application | Custom & Developed Apps | Comprehensive vulnerability assessment of apps and associated servers |

Table 4.2: Vulnerability Assessment Tools

4.3.3 Penetration Testing

| Testing | Tools | Descriptions |
|---|---|---|
| Basic | Metasploit | Utilized for basic penetration testing, focusing on common attacks and existing exploits |
| Advanced | Custom Exploits | Advanced testing platform design and implementation with specific attacks depending on identified vulnerabilities |

Table 4.3: Penetration Testing Tools

4.3.4 Server Hardening

| Security Measures | Details |
|---|---|
| IPTables Configuration | IPTables is a powerful network firewall tool that can be used to control and secure network traffic. |
| File & LVM Encryption | Logical Volume Manager (LVM) and file encryption ensure robust data security and flexible storage management. |
| SYSCTL | Optimizing and securing system settings through SYSCTL configuration |
| USBGuard | Using USBGuard to control USB devices and prevent unauthorized access |
| AppArmor Configuration | Comprehensive application protection with AppArmor configuration and security packages |
| Server Patch Update | Regularly applying patches and updates to address vulnerabilities identified by security assessments |
| Device Restriction | Implementing granular device restrictions to bolster overall system security |
| Access Log Monitoring | Ongoing monitoring of access logs to detect and respond to anomalous activities |

Table 4.4: Server Hardening Process

4.4 SOC Implementation

The implementation of a robust Security Operations Center (SOC) is essential for ensuring the security, compliance, and confidentiality of Meghna Cloud services. The SOC encompasses a variety of tools and strategies for incident detection, prevention, response, and threat intelligence, enabling the Meghna Cloud team to proactively monitor and respond to potential security threats.

| Aspects | Tools | Features | Descriptions |
|---|---|---|---|
| SIEM | Wazuh | - | Wazuh, a comprehensive SIEM solution, facilitates security event analysis, incident management, and regulatory compliance reporting. |
| XDR | Wazuh | - | We leverage Wazuh, an XDR platform, for advanced threat detection, hunting, response, and compliance. |
| Threat Intelligence | MITRE ATT&CK | - | We use a comprehensive threat intelligence approach that includes threat statistics, insider threat analysis, osquery, the MITRE ATT&CK framework, Docker listening, and VirusTotal integration. |
| IDS | Suricata | Log-based IDS | Monitors system and application logs for indicators of compromise. |
| | | Host-based IDS | Analyzes host activity for actionable insights. |
| | | Rootkit & malware detection | Protects systems from rootkits and malware. |
| | | Real-time alerts & notifications | Generates immediate alerts for real-time security threats. |
| | | Multi-layer | Employs a multi-layered defense strategy to ensure robust security. |
| Firewall | IPtables | - | Maintains network security through packet filtering. |
| Antivirus | ClamAV | - | Provides antivirus protection for servers. |

Table 4.5: SOC Implementation Tools

4.5 Vulnerability Assessment Platform

We treat a robust Vulnerability Assessment (VA) platform as an integral part of our comprehensive security infrastructure. Our VA platforms are designed to proactively identify, assess, and manage vulnerabilities across network and application layers, ensuring a secure cloud environment for our clients.

| Aspects | Key points | Descriptions |
|---|---|---|
| Auto VA | Tool Used | Wazuh & OpenVAS |
| | Functionality | Automated and comprehensive vulnerability assessment of network infrastructure |
| | Components | Integrated Wazuh and OpenVAS for threat detection and vulnerability scanning |
| | Coverage | Comprehensive IT infrastructure management, encompassing networking devices, servers, OS, and other server applications |
| | Scanning Types | Full, partial, and baseline scans provide comprehensive data protection, tailored to specific needs |
| | Platform Purpose | Proactively identify and remediate vulnerabilities to ensure the security of systems and data |
| Manual VA | Framework Design | Manual vulnerability assessment platform for developed or customized applications |
| | Tool Used | Tools customized to address assessment findings |
| | Scanning Types | Comprehensive evaluation of developed or tailored software |
| | Platform Purpose | Advanced testing platform design with customized exploits for assessed vulnerabilities |

Table 4.6: Vulnerability Assessment Process

4.5.1 Application VA

Meghna Cloud goes beyond conventional security measures by incorporating a specialized Application Vulnerability Assessment (AVA) platform. This integral facet of our security strategy involves a meticulous assessment of all developed or customized applications. Our dedicated security team evaluates the application layer, identifying and remediating vulnerabilities unique to each application. This ensures that the software running on our cloud infrastructure meets the highest standards of security and resilience.

4.6 Threat Intelligence

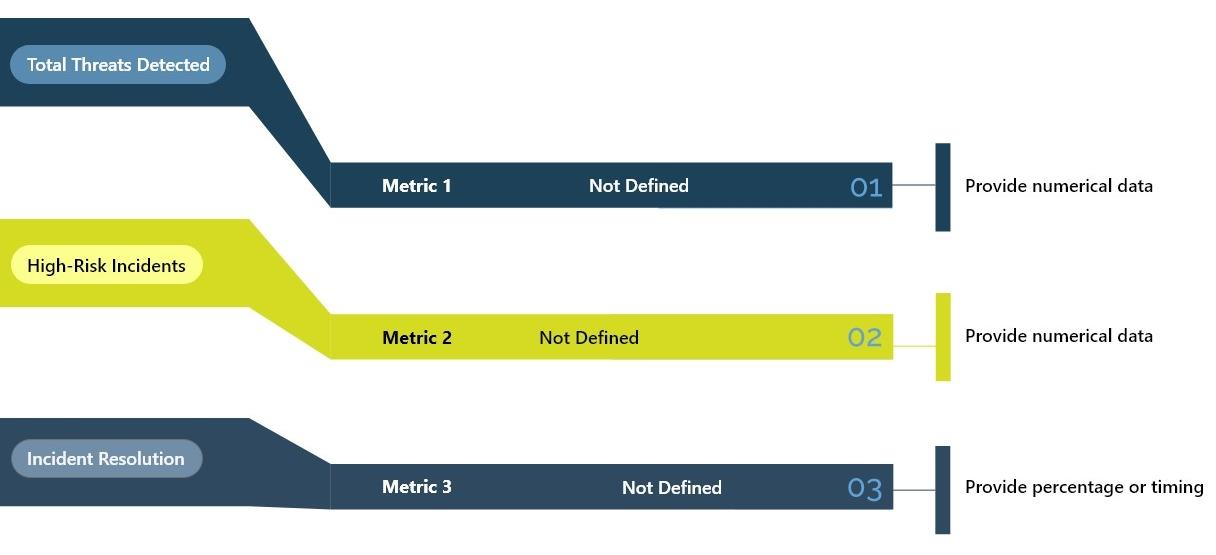

4.6.1 Threat Statistics

4.6.2 Insider Threat Analysis

Insider threats, a pervasive and evolving menace to data security, necessitate continuous monitoring and evaluation of user activities to detect anomalous behavior indicative of internal security risks. Regular assessments and proactive measures, including security awareness training, access control policies, and incident response planning, are essential to mitigate insider threats, safeguard sensitive data, and ensure organizational integrity.

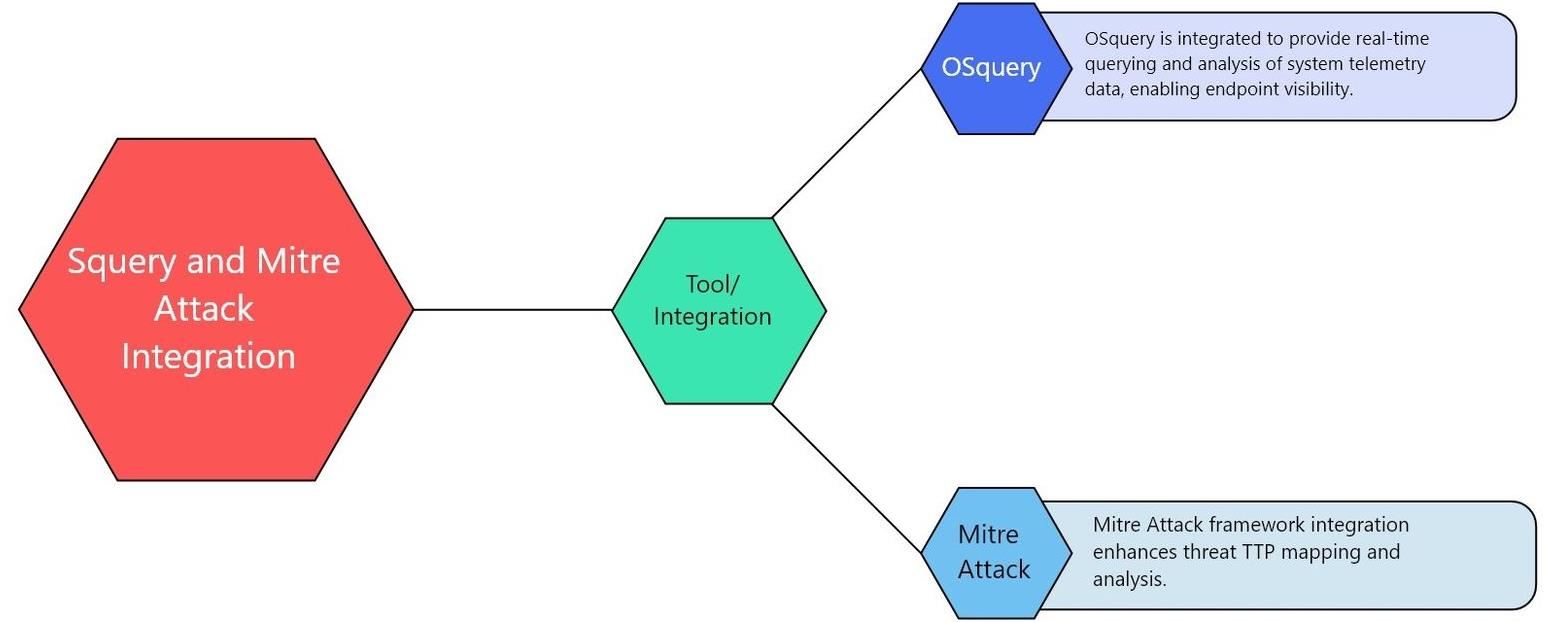

4.6.3 osquery and MITRE ATT&CK Integration

4.6.4 Docker Listening and VirusTotal Integration

| Aspects | Overview | Objective |
|---|---|---|
| Docker Listening | Continuous monitoring of the Docker environment for anomalous activity. | Early detection of unauthorized access or malicious activity in Docker containers. |
| VirusTotal Integration | VirusTotal integration for file and URL analysis. | Strengthen threat intelligence by harnessing the vast database of malware signatures and behaviors. |
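As a rough illustration of the VirusTotal integration above, the sketch below computes a file's SHA-256 digest and builds, but does not send, a lookup request against the VirusTotal API v3 file-report endpoint. The API key is a caller-supplied placeholder and the helper names are hypothetical; how our Wazuh deployment actually calls VirusTotal is not described here.

```python
import hashlib
import urllib.request

VT_FILE_REPORT_URL = "https://www.virustotal.com/api/v3/files/{}"

def sha256_of_file(path, chunk_size=65536):
    """Hash a file in chunks so large files need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def build_vt_lookup(path, api_key):
    """Build a VirusTotal v3 file-report request keyed by the file's SHA-256.

    Only the hash would leave the host, not the file contents. The request is
    returned unsent; passing it to urllib.request.urlopen() would execute it.
    """
    file_hash = sha256_of_file(path)
    return urllib.request.Request(
        VT_FILE_REPORT_URL.format(file_hash),
        headers={"x-apikey": api_key},  # hypothetical key supplied by the caller
        method="GET",
    )
```

Looking files up by hash rather than uploading them keeps potentially sensitive client data on the host.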

4.7 Incident Response

4.7.1 Security Log Analysis

We leverage advanced log analysis tools, including security information and event management (SIEM) systems, to collect and analyze logs from a variety of sources in real time. This enables Meghna Cloud to rapidly identify security incidents and mitigate potential threats.

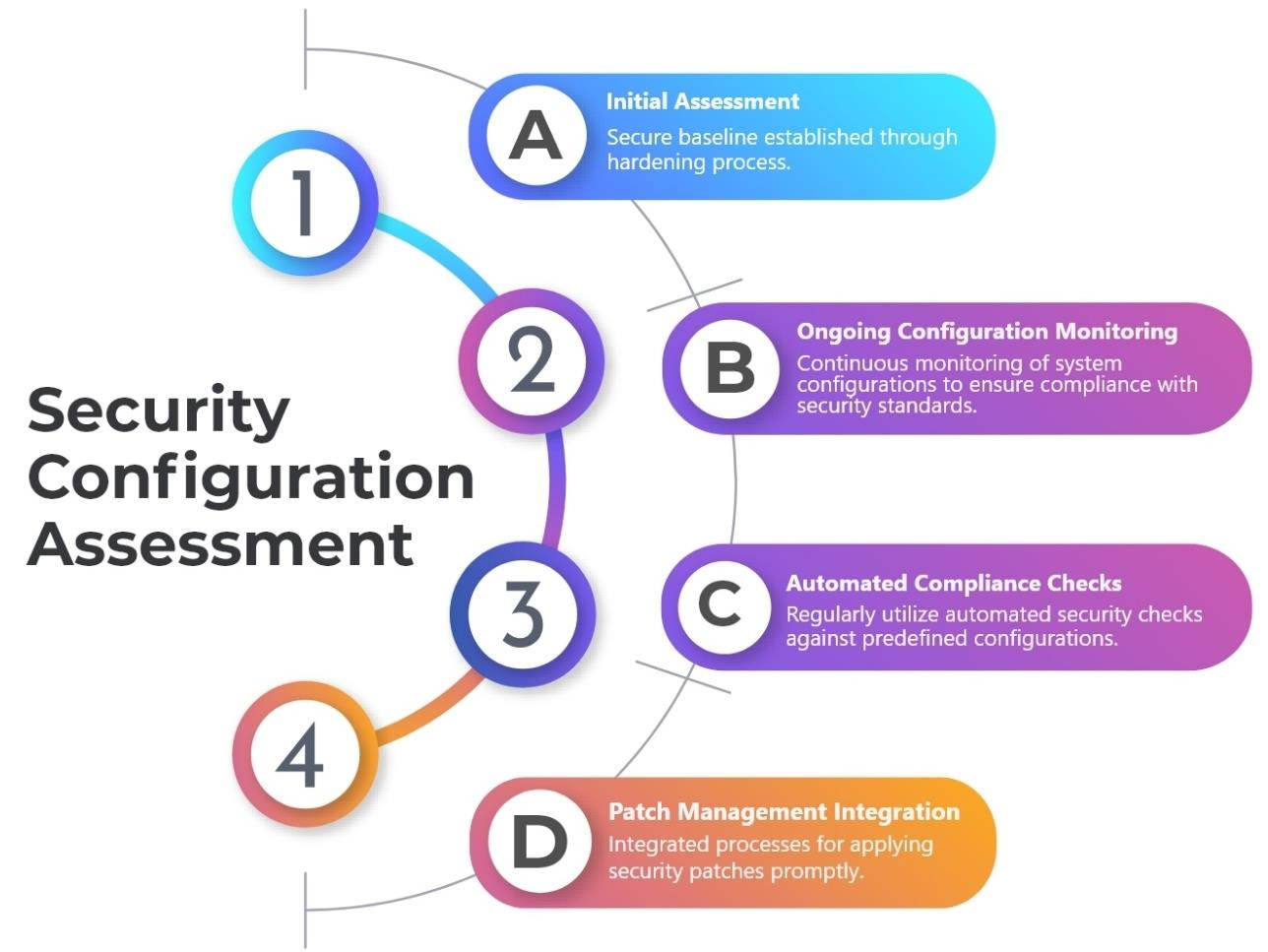
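To make the log analysis idea concrete, here is a minimal sketch of one correlation a SIEM typically performs: counting failed SSH logins per source address within a sliding time window and flagging addresses that reach a threshold. The input format (pre-parsed timestamps paired with OpenSSH "Failed password" messages), the threshold, and the window length are assumptions for illustration, not our actual Wazuh rules.

```python
import re
from collections import defaultdict, deque

# Matches OpenSSH messages such as
# "Failed password for invalid user admin from 203.0.113.5 port 52144 ssh2"
FAILED_SSH = re.compile(r"Failed password for (?:invalid user )?(\S+) from (\S+) port \d+")

def detect_bruteforce(events, threshold=5, window_seconds=300):
    """Return source IPs with >= threshold failed logins inside the window.

    events: iterable of (epoch_seconds, message) tuples sorted by time.
    """
    recent = defaultdict(deque)  # ip -> timestamps of recent failures
    flagged = set()
    for ts, message in events:
        match = FAILED_SSH.search(message)
        if not match:
            continue  # not a failed-password event
        ip = match.group(2)
        times = recent[ip]
        times.append(ts)
        # Drop failures that have fallen out of the sliding window.
        while times and ts - times[0] > window_seconds:
            times.popleft()
        if len(times) >= threshold:
            flagged.add(ip)
    return flagged
```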

4.7.2 Security Configuration Assessment

4.7.3 Alert & Notification Processes

| Aspects | Details |
|---|---|
| Real-time Alerts | Security incidents are detected and alerted on immediately. |
| Escalation Protocols | Well-defined escalation protocols for alerts, tailored to their severity. |
| Incident Classification | Incidents are categorized to ensure appropriate responses. |
| Notification Channels | Stakeholders are notified via multiple channels (e.g., email, SMS). |

Table 4.8: Alert & Notification Processes
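The severity-based escalation in Table 4.8 can be sketched as a lookup from alert level to notification channels. Wazuh rules assign alert levels from 0 to 15; the level bands, channel names, and the `route_alert` helper below are hypothetical choices, not our documented escalation matrix.

```python
from dataclasses import dataclass

# Hypothetical escalation bands, checked from most to least severe:
# (minimum Wazuh rule level, severity label, notification channels)
ESCALATION_BANDS = [
    (12, "critical", ["sms", "email", "on-call-page"]),
    (7, "high", ["email", "soc-dashboard"]),
    (3, "low", ["soc-dashboard"]),
]

@dataclass
class Alert:
    rule_id: str
    level: int
    description: str

def route_alert(alert):
    """Return (severity label, channels); levels below every band are only logged."""
    for min_level, label, channels in ESCALATION_BANDS:
        if alert.level >= min_level:
            return label, list(channels)
    return "informational", []
```

Keeping the bands as data rather than code makes it easier to review and adjust the escalation protocol without touching the routing logic.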

4.7.4 Reporting Insights

| Aspects | Details |
|---|---|
| Incident Reports | Concise incident reports on timelines, actions, and outcomes. |
| Trend Analysis | Identify security incident patterns and trends to inform proactive measures. |
| Continuous Improvement Recommendations | Analyze historical data to refine incident response. |

Table 4.9: Reporting Insights

4.8 Security Tools and Measures

Our security infrastructure is designed to proactively detect, prevent, and respond to threats, ensuring a secure environment for our clients. The configuration details of our security infrastructure are regularly reviewed and updated as part of our commitment to maintaining the highest standards of security.

4.8.1 IDS/IPS Configuration

| Tools | Configurations |
|---|---|
| Suricata | Suricata, an IDS/IPS engine that supports Snort-compatible rules, actively monitors network traffic for potential threats using regularly updated rule sets. |

Table 4.10: IDS/IPS Configuration
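As an illustration of consuming Suricata's output, the sketch below reads EVE JSON records (one JSON object per line, as Suricata writes to `eve.json`) and keeps only high-priority alerts. In Suricata a lower `alert.severity` value means a higher priority, with 1 the most severe; the cutoff of 2 is an assumption.

```python
import json

def high_priority_alerts(lines, max_severity=2):
    """Yield (src_ip, dest_ip, signature) for EVE alerts at or above a priority."""
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            event = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip partially written or corrupt lines
        if event.get("event_type") != "alert":
            continue  # flows, DNS, HTTP and other event types are ignored here
        alert = event.get("alert", {})
        # Severity 1 is the highest priority, so smaller numbers are worse.
        if alert.get("severity", 255) <= max_severity:
            yield event.get("src_ip"), event.get("dest_ip"), alert.get("signature")
```

In practice `lines` could simply be an open file handle on `eve.json`.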

4.8.2 Firewall

We employ iptables, a robust firewall solution that controls and filters network traffic so that only authorized communication passes through, providing an essential layer of protection against unauthorized access and potential cyber threats.
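A default-deny iptables policy of the kind described above can be sketched as an ordered list of commands. The helper below only builds the command list and executes nothing; the allowed ports and management subnet are hypothetical examples. Accept rules for loopback and established traffic are added before the INPUT policy switches to DROP, so an administrator's existing SSH session is not cut off partway through.

```python
def build_input_rules(allowed):
    """Return iptables commands (as argument lists) for a default-deny INPUT chain.

    allowed: list of (tcp_port, source_cidr_or_None) pairs to permit.
    """
    rules = [
        ["iptables", "-A", "INPUT", "-i", "lo", "-j", "ACCEPT"],
        ["iptables", "-A", "INPUT", "-m", "conntrack",
         "--ctstate", "ESTABLISHED,RELATED", "-j", "ACCEPT"],
    ]
    for port, source in allowed:
        rule = ["iptables", "-A", "INPUT", "-p", "tcp", "--dport", str(port)]
        if source:
            rule += ["-s", source]  # restrict to a source network if given
        rules.append(rule + ["-j", "ACCEPT"])
    # Switch the default policy last so existing sessions survive the change.
    rules.append(["iptables", "-P", "INPUT", "DROP"])
    return rules
```

For example, `build_input_rules([(22, "10.0.0.0/8"), (443, None)])` limits SSH to a management network while leaving HTTPS open; each list could then be passed to `subprocess.run(rule, check=True)` on a host where iptables is available.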

4.8.3 Antivirus

| Tools | Configurations |
|---|---|
| ClamAV | Regular updates to antivirus software safeguard servers against evolving malware and other malicious software. |

Table 4.11: Antivirus
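Routine scanning with ClamAV's `clamscan` can be wrapped as in the sketch below. `clamscan` exits with 0 when no infection is found, 1 when at least one infected file is found, and 2 on error; with `--infected` it prints only lines of the form `path: SignatureName FOUND`. The wrapper and its names are illustrative; signature updates themselves would be handled separately (for example by `freshclam`).

```python
import subprocess

def parse_clamscan_output(stdout):
    """Map each infected path to its signature name from clamscan output."""
    findings = {}
    for line in stdout.splitlines():
        if line.endswith(" FOUND"):
            # rpartition tolerates ": " appearing inside the file path itself.
            path, _, signature = line[: -len(" FOUND")].rpartition(": ")
            findings[path] = signature
    return findings

def scan(path):
    """Run clamscan recursively; return findings or raise on scanner error."""
    result = subprocess.run(
        ["clamscan", "--recursive", "--infected", "--no-summary", path],
        capture_output=True, text=True,
    )
    if result.returncode == 2:
        raise RuntimeError(f"clamscan error: {result.stderr.strip()}")
    return parse_clamscan_output(result.stdout)
```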

4.8.4 Auto Vulnerability Assessment Platform

| Tools | Descriptions |
|---|---|
| Wazuh & OpenVAS | OpenVAS and Wazuh integrated for automated vulnerability assessment, scanning, reporting, and timely detection and response. |

Table 4.12: Auto Vulnerability Assessment Platform

4.8.5 Integrity Monitoring

| Tools | Configurations |
|---|---|
| Wazuh | Wazuh-based integrity monitoring continuously tracks file integrity and system changes, triggering alerts for prompt investigation and remediation of unauthorized modifications. |

Table 4.13: Integrity Monitoring
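The core idea behind file integrity monitoring can be sketched in a few lines: record a baseline of file hashes, rescan later, and report what was added, removed, or modified. This simplified illustration assumes SHA-256 content hashes are enough; Wazuh's integrity monitoring additionally tracks attributes such as permissions, ownership, and modification times, and can report changes in real time.

```python
import hashlib
import os

def snapshot(root):
    """Return {relative_path: sha256_hex} for every file under root."""
    hashes = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full = os.path.join(dirpath, name)
            with open(full, "rb") as fh:
                hashes[os.path.relpath(full, root)] = hashlib.sha256(fh.read()).hexdigest()
    return hashes

def diff_snapshots(baseline, current):
    """Compare two snapshots and classify the differences."""
    added = sorted(current.keys() - baseline.keys())
    removed = sorted(baseline.keys() - current.keys())
    modified = sorted(p for p in baseline.keys() & current.keys()
                      if baseline[p] != current[p])
    return {"added": added, "removed": removed, "modified": modified}
```

The baseline itself must be stored where an attacker who modifies the monitored files cannot also rewrite it.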

4.9 Access Management

| Features | Details |
|---|---|
| IAM/PAM/Ticketing | Integrated Identity and Access Management (IAM), Privileged Access Management (PAM), and ticketing solutions for holistic Identity Governance and Administration (IGA). |
| Single Sign-On (SSO) | Provides a seamless and secure Single Sign-On solution for simplified user access across Meghna Cloud services. |
| Identity Brokering | Social login and identity brokering are enabled for convenient and secure user authentication. |
| User Federation | Centralized user management and authentication for efficient, multi-system access control. |
| Admin Console | Granular user access and system configuration controls via a robust administrative console. |
| Account Console | Secure, user-friendly self-service console for account management. |
| Protocols | Secure, standardized communication and access through adherence to industry-standard protocols. |

Table 4.14: Access Management

4.10 Inventory and User Behavior Analysis

| Features | Key Points |
|---|---|
| Inventory Management | All-inclusive inventory of data systems. |
| | Systematic inventory data management. |
| | Real-time asset tracking and monitoring. |
| | Integration with security incident management for enhanced accuracy. |
| User Behavior Analysis | Advanced threat analysis by monitoring user behavior. |
| | Continuous anomaly detection through activity analysis. |
| | Identification of patterns and anomalies in user behavior. |
| | Integration with threat intelligence for proactive threat mitigation. |
| Threat Analysis | In-depth analysis of identified threats and vulnerabilities. |
| | Continuous risk and exploit monitoring. |
| | Collaboration with threat intelligence to provide up-to-date insights. |
| | Actionable threat intelligence for timely response. |
| Activities Analysis | Network-wide user activity monitoring and analysis. |
| | Identification of suspicious or unauthorized activities. |
| | Correlation of threat intelligence with context to improve decision-making. |
| | Real-time monitoring and reporting of anomalous activities. |

Table 4.15: Inventory and User Behavior Analysis

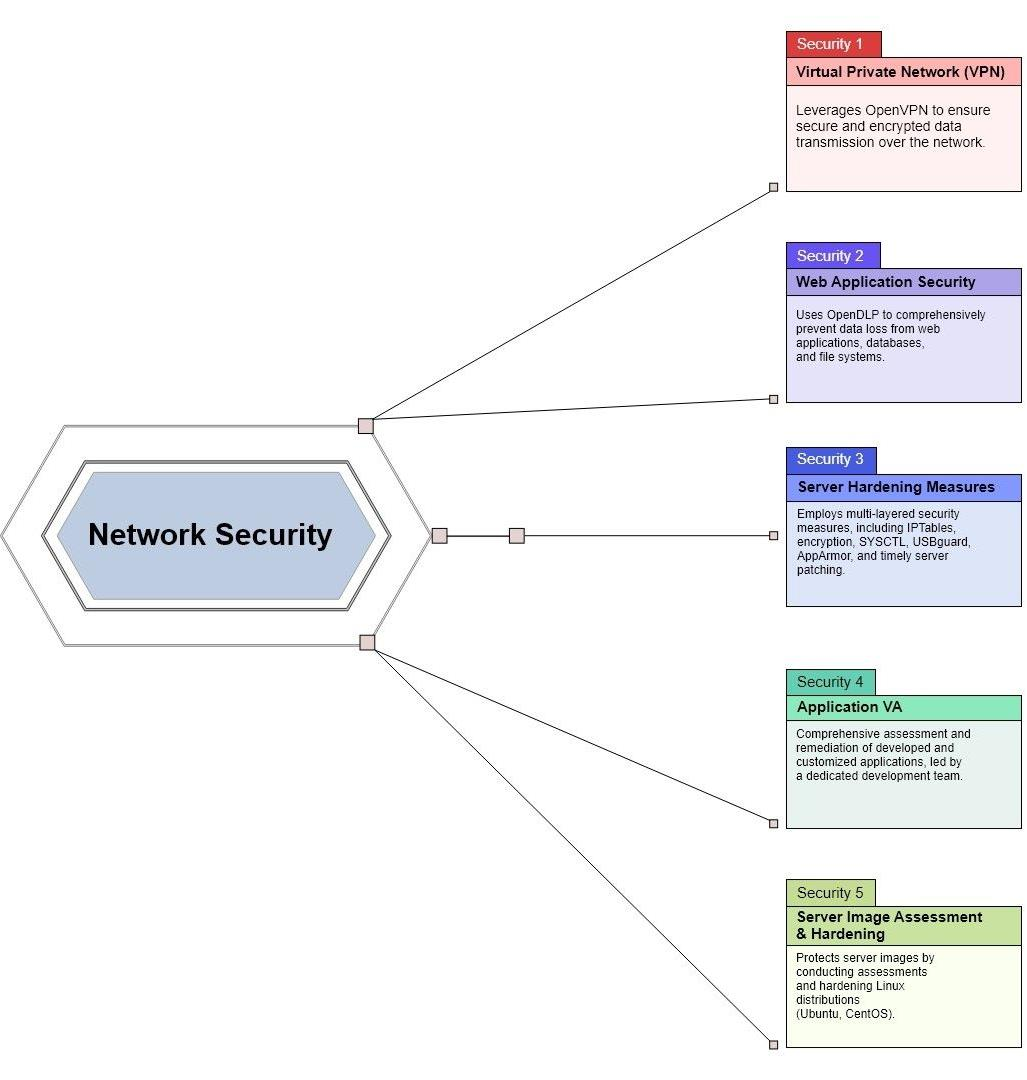
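One simple form of the user behavior analysis in Table 4.15 compares a user's activity today against that user's own history. The sketch below flags users whose daily event count (for example, logins or file accesses) lies more than a chosen number of standard deviations above their baseline mean. The z-score method, the threshold of 3, the minimum history length, and the input format are all assumptions for illustration; production tools typically combine many more signals.

```python
from statistics import mean, pstdev

def flag_anomalous_users(history, today, z_threshold=3.0, min_days=7):
    """Return {user: z_score} for users whose activity today is unusually high.

    history: {user: [daily counts for previous days]}
    today:   {user: count for today}
    """
    flagged = {}
    for user, count in today.items():
        past = history.get(user, [])
        if len(past) < min_days:
            continue  # not enough history for a meaningful baseline
        mu, sigma = mean(past), pstdev(past)
        if sigma == 0:
            sigma = 1.0  # avoid division by zero for perfectly flat baselines
        z = (count - mu) / sigma
        if z > z_threshold:
            flagged[user] = round(z, 2)
    return flagged
```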

4.11 Network Security

4.12 Partnership

| Aspect | Partner Solution | Implementation Status | Remarks |
|---|---|---|---|
| Data Loss Prevention | OpenDLP | Ready to use | Integrated OpenDLP for robust data loss prevention, safeguarding confidentiality and ensuring compliance. |
| OTP Implementation | - | In progress | OTP implementation is underway to bolster access security; user authentication enhancements are in progress. |
| DPI (Client Service Related) | Client service partner | Under review | Collaboration with a specialized DPI service provider for holistic inspection of client service data packets. |
| NDR or Cybersensor (Client Service Related) | Client service partner | Under review | Partnership with a cybersecurity-sensor provider for advanced threat detection and response in client services. |

Table 4.16: Partnership
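The table does not say which OTP scheme is being implemented. Assuming time-based one-time passwords (TOTP, RFC 6238, which builds on HOTP from RFC 4226), the core calculation can be sketched with the standard library alone. This illustrates the algorithm only; it is not the in-progress implementation, and a production deployment would also need secret provisioning, rate limiting, and replay protection.

```python
import hashlib
import hmac
import struct
import time

def hotp(secret, counter, digits=6):
    """HOTP (RFC 4226): HMAC-SHA1 over an 8-byte big-endian counter."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)

def totp(secret, timestamp=None, step=30, digits=6):
    """TOTP (RFC 6238): HOTP with the counter derived from Unix time."""
    if timestamp is None:
        timestamp = time.time()
    return hotp(secret, int(timestamp // step), digits)

def verify_totp(secret, submitted, timestamp=None, window=1, step=30):
    """Accept codes from the current step or +/- `window` steps of clock drift."""
    if timestamp is None:
        timestamp = time.time()
    return any(
        hmac.compare_digest(totp(secret, timestamp + i * step, step, len(submitted)), submitted)
        for i in range(-window, window + 1)
    )
```

`hmac.compare_digest` is used for the comparison so that verification time does not leak how many leading digits matched.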

5. Cyber Security Operations Strategy & Design

Our team will evaluate and improve operations security using the following:

Review of Operations Center Controls

- Operational procedures and responsibilities: review documentation and evaluate guidance regarding change management, capacity management, and the separation of development, test, and production environments.

Malware Detection and Prevention Controls

- Evaluate their level of effectiveness.

Data Center Backup Strategy

- Evaluate whether backup procedures and methods (e.g., encryption) are effective for both on-premises and off-premises backup management.

Audit Trails and Logging

- Review whether audit trails and logging are implemented effectively, recording user activities so that security reviews can detect tampering and unauthorized access.

Installation of Software on Operational Systems

- Ensure licensing requirements are met.

Technical Vulnerability Management

- Implement a formal vulnerability management program to proactively test IT infrastructure for exploitable vulnerabilities, and ensure that an effective process is in place to manage corrective actions in collaboration with stakeholders.

Information Systems Audit

- Prepare in advance for IT controls audits to avoid service disruptions.

Reducing Information Security Risk and Ensuring Correct Computing

Our team will work with your organization to effectively reduce information security risk, ensure correct computing, and implement a security program that includes operational procedures, controls, and well-defined responsibilities. These are complemented, and often necessitated, by formal policies, procedures, and controls that protect the exchange of data and information through any type of communication media or technology.

We briefly examine seven key security control areas in this chapter:

- Operational Procedures and Responsibilities (important operational processes include: Change Management; Capacity Management; Separation of Development, Test, and Operations Environments)

- Protection from Malware

- Backups

- Logging and Monitoring

- Control of Operational Software

- Technical Vulnerability Management

- Information System Audit Considerations

Operational Procedures and Responsibilities

To ensure the effective operation and security of information processing facilities.

Documented Operating Procedures

Key Question: Do we have procedures that are readily available, periodically updated, and consistently executed?

Operating procedures must be documented and readily available to the teams to which they are relevant. These procedures should include methods that reduce the likelihood of introducing or increasing risk through accidental or ill-advised changes. Before authoring documentation, it is important to identify the intended audience up front. For instance, documentation intended to support new hires (continuity) often requires a greater degree of detail than steps for staff who regularly perform operations tasks.

Operating procedures should be treated as formal documents, maintained and managed with version control and approval processes in place. As technology and our systems infrastructure change, operational procedures will inevitably become out of date or inaccurate. Formal documentation and review processes help reduce the likelihood of outdated procedures, which bring their own risks: loss of availability, failure of data integrity, and breaches of confidentiality.

What Should We Document?

The decision about which areas deserve documentation should be informed by an understanding of organizational risks, including issues that have been observed previously. A good starting list of items to consider includes:

- Configuration and build procedures for servers, networking equipment, and desktops.

- Automated and Manual Information Processing

- Backup procedures

- System scheduling dependencies

- Error handling

- Change Management Processes

- Capacity Management & Planning Processes

- Support and escalation procedures

- System Restart and Recovery

- Special Output

- Logging & Monitoring Procedures

5.1 Change Management Procedures

Key Question: Do we have a formal method for classifying, evaluating, and approving changes?

Change management processes are essential for ensuring that risks associated with significant revisions to software, systems, and key processes are identified, assessed, and weighed in the context of an approval process. It is critical that information security considerations be included in change review and approval alongside other objectives such as support and service level management.

What changes should we evaluate, and how do we get started?

Change management is a broad subject (see resource below for additional reading); however, some important considerations from an information security perspective include:

- Helping to ensure that changes are identified and recorded.

- Assessing and reporting on information security risks relevant to proposed changes.

- Helping classify changes according to the overall significance of the change in terms of risk.

- Helping establish or evaluate planning, testing, and “back out” steps for significant changes.

- Helping ensure that change communications are handled in a structured manner (see RACI matrix below).

- Ensure that emergency change processes are well defined, communicated, and that security evaluation of these changes is also performed post-change.
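One way to support the risk-based classification of changes listed above is a simple scoring rubric. The factors, weights, and class boundaries in this sketch are hypothetical; each organization would calibrate its own, and emergency changes would still receive the post-change security evaluation described above.

```python
from dataclasses import dataclass

@dataclass
class ChangeRequest:
    summary: str
    touches_production: bool
    affects_security_controls: bool  # e.g. firewall, IAM, or logging changes
    users_affected: int
    has_tested_backout: bool

def classify_change(cr):
    """Return 'standard', 'normal', or 'major' from a hypothetical risk score."""
    score = 0
    score += 2 if cr.touches_production else 0
    score += 3 if cr.affects_security_controls else 0
    score += 2 if cr.users_affected > 1000 else (1 if cr.users_affected > 50 else 0)
    score += 0 if cr.has_tested_backout else 2  # no tested back-out plan raises risk
    if score >= 6:
        return "major"     # full change advisory review
    if score >= 3:
        return "normal"    # peer and security review
    return "standard"      # pre-approved, low-risk change
```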

Common Challenges

"Change Management Takes Too Much Time." Change management processes are notoriously prone to becoming overly complex. Staff who feel a process is too burdensome are more likely to bypass it, either by intentionally classifying their changes at low levels or by not reporting them at all. When starting a change management program, it often helps to focus first on modeling large-scale changes and then to work toward change-level definitions that balance risk reduction with operational agility and efficiency.

Notes/Ideas

- Business Impact Analysis - Undertaking a Business Impact Analysis can often strengthen change management by developing an understanding of both system-level and process-level dependencies. This helps teams evaluate and plan for less obvious issues that emerge when changes affect system interactions (e.g., cascading failures).
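The dependency map a Business Impact Analysis produces can be used directly during change review. Given which systems depend on which, a breadth-first search finds every system that could be affected, directly or through a cascade, by a change to one component. The system names in this sketch are hypothetical.

```python
from collections import deque

def impacted_systems(depends_on, changed):
    """Return all systems that directly or transitively depend on `changed`.

    depends_on: {system: [systems it depends on]}
    """
    # Invert the map so we can walk from a component to its dependents.
    dependents = {}
    for system, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(system)
    impacted, queue = set(), deque([changed])
    while queue:
        current = queue.popleft()
        for system in dependents.get(current, ()):
            if system not in impacted:
                impacted.add(system)
                queue.append(system)
    return impacted
```

For example, with a map where a web portal depends on an authentication service and a database, and reporting depends on billing, which in turn depends on the same database, a database change reports the portal, billing, and reporting as impacted.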

5.2 Capacity Management Procedures

Key Question: Do we monitor resource utilization and establish projections of capacity requirements to ensure that we maintain service performance levels?

Formal capacity management processes involve tuning systems, monitoring the use of present resources, and, with the support of user planning input, projecting future requirements. Controls that detect and respond to capacity problems help ensure a timely reaction. This is especially important for communications networks and shared resource environments (virtual infrastructure), where sudden changes in utilization can result in poor performance and dissatisfied users.

To address this, regular monitoring processes should be employed to collect, measure, analyze, and predict capacity metrics, including disk capacity, transmission throughput, and service/application utilization.

Periodic testing of capacity management plans and assumptions (whether tabletop exercises or direct simulations) can also help proactively identify issues that must be addressed to preserve high availability for critical services.
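The "predict" step of capacity monitoring can be as simple as fitting a linear trend to recent utilization samples and estimating when a resource will run out. The sketch below uses an ordinary least-squares fit; real growth is often non-linear or seasonal, so the result is better treated as an early-warning signal than as a forecast. The sample values are hypothetical.

```python
def days_until_full(samples, capacity):
    """Estimate days until `capacity` is reached from (day, used) samples.

    Fits an ordinary least-squares line; returns None if usage is flat or shrinking.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = sum(x for x, _ in samples) / n
    mean_y = sum(y for _, y in samples) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in samples)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in samples)
    if sxx == 0:
        raise ValueError("samples need at least two distinct days")
    slope = sxy / sxx
    if slope <= 0:
        return None  # no growth trend, so no projected exhaustion date
    intercept = mean_y - slope * mean_x
    day_full = (capacity - intercept) / slope
    last_day = max(x for x, _ in samples)
    return max(day_full - last_day, 0.0)
```

For instance, a 100 GB volume sampled at 50, 55, 60, and 65 GB on four consecutive days grows by 5 GB per day and is projected to fill in 7 more days.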

Notes/Ideas

Emergency Operations - Many campuses that have experienced a crisis have seen dramatic surges in requests for information from institutional websites. If units at your institution plan and manage emergency operations, partnering with them to evaluate the capacity management implications of various emergency response scenarios can be helpful.

Cloud Service Models + Resource Elasticity - Enterprise cloud service models, including PaaS, IaaS, and SaaS, often offer attractive resource elasticity features (in some cases, automatic and rapid scaling in response to demand). When weighing these benefits and risk reduction capabilities, it is also important to understand and review other security considerations relevant to cloud computing (see Cloud Computing Security Hot Topic).

6. Protection From Malware

To protect the confidentiality, integrity, and availability (CIA) of information technology resources and data.

Key Question: Do we have effective security controls to prevent, detect, and recover from malware threats?

Malware prevention can only be as effective as the anti-malware solutions currently in place, so proactive assessment of the effectiveness of anti-malware controls is both appropriate and necessary, as is user awareness training. Maintaining centrally managed, current protection updates is important, as is ensuring that users understand the importance of properly installing and using the anti-malware solutions they are provided. Malicious mobile code that is obtained from remote servers, transferred across networks, and downloaded to computers (e.g., ActiveX controls, JavaScript, Flash animations) is also a continuing concern. Where relevant, technical controls can be put in place to enforce guidelines and procedures that distinguish between authorized and unauthorized mobile code.

6.1 Backups

To ensure the integrity and availability of information processed and stored within information processing facilities.

Key Question: Do we make copies of information, software, and system images regularly and in accord with policy requirements?

System backups are critical: the integrity and availability of important information and software should be maintained by making regular copies to other media. Risk assessments should be used to identify the most critical data. Backup procedures should be well defined, and long-term storage requirements, restore testing, and business continuity planning should be clearly established.
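Long-term storage requirements are often expressed as a retention schedule. The sketch below applies a grandfather-father-son style policy that keeps the most recent daily, weekly, and monthly backups; the counts of 7, 4, and 12 are assumptions, and a real schedule would come out of the risk assessment described above.

```python
def backups_to_keep(backup_dates, daily=7, weekly=4, monthly=12):
    """Select backup dates to retain under a grandfather-father-son policy.

    Keeps the newest `daily` backups, the newest backup in each of the
    `weekly` most recent ISO weeks, and the newest backup in each of the
    `monthly` most recent calendar months. Everything else may be pruned.
    """
    ordered = sorted(set(backup_dates), reverse=True)  # newest first
    keep = set(ordered[:daily])
    weeks_seen, months_seen = set(), set()
    for d in ordered:
        week = tuple(d.isocalendar()[:2])  # (ISO year, ISO week number)
        if week not in weeks_seen and len(weeks_seen) < weekly:
            weeks_seen.add(week)
            keep.add(d)  # newest backup of this week
        month = (d.year, d.month)
        if month not in months_seen and len(months_seen) < monthly:
            months_seen.add(month)
            keep.add(d)  # newest backup of this month
    return keep
```

Backups selected for retention still need periodic restore tests; retention alone does not prove they are usable.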

6.2 Logging and Monitoring

Key Question: Do we have processes and methods to reliably record, store, monitor, and review system events?

Effective logging allows us to reach back in time to identify events, interactions, and changes that may be relevant to the security of information resources. A lack of logs often means that we lose the ability to investigate events (e.g., anomalies, unauthorized access attempts, excessive resource use) and to perform root cause analysis. In the context of this control area, logs can be interpreted very broadly to include automated and handwritten logs of administrator and operator activities taken to ensure the integrity of operations in information processing facilities, such as data and network centers.

How do we protect the value of log information?

Effective logging strategies must also consider how log data can be protected against tampering, sabotage, or deletion that would undermine the integrity of log information. This usually involves role-based access controls that partition the ability to read and modify log data according to business needs and position responsibilities. In addition, timestamp information is critical when performing correlation analysis between log sources. One essential supporting control is ensuring that all institutional systems synchronize their clocks to a common source (often achieved via an NTP server) so that events can be placed on a timeline with high confidence.
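Beyond access controls, one common technique for making log tampering detectable is hash chaining: each record stores a hash covering both its own content and the previous record's hash, so editing or deleting an earlier record breaks every later link. The sketch below is a minimal illustration of that technique, using UTC timestamps in line with the clock-synchronization point above; it is not a description of how our SIEM stores logs.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first record

def _digest(prev_hash, body):
    payload = prev_hash + json.dumps(body, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def append_record(chain, event):
    """Append an event, linking it to the hash of the previous record."""
    body = {"ts": datetime.now(timezone.utc).isoformat(), "event": event}
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({"body": body, "prev": prev_hash, "hash": _digest(prev_hash, body)})

def verify_chain(chain):
    """Return True only if every record's hash and back-link are intact."""
    prev_hash = GENESIS
    for record in chain:
        if record["prev"] != prev_hash or record["hash"] != _digest(prev_hash, record["body"]):
            return False
        prev_hash = record["hash"]
    return True
```

Hash chaining only detects tampering if the latest hash is also kept somewhere an attacker cannot rewrite, for example forwarded to a separate log server.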

What should we log?

Deciding which types of events to log must take into consideration a number of factors, including relevant compliance obligations, institutional privacy policies, data storage costs, access control needs, and the ability to monitor and search large data sets in an appropriate time frame. When considering your overall logging strategy, it is often helpful to “work backwards.” Rather than initially attempting to catalog all event types, it can be useful to frame investigatory questions, beginning with issues that occur on a regular basis or that could be associated with significant risk events (e.g., abuse of or attacks on ERP systems). These questions can then lead to a focused review of the security event data most relevant to those issues. Ideally, event logs should include key information such as:

- User IDs

- System activities

- Dates, times, and details of key events

- Device identity or location

- Records of successful and rejected system access attempts

- Records of successful and rejected resource access attempts

- Changes to system configurations

- Use of privileges

- Use of system utilities and applications

- Files accessed and the kind of access

- Network addresses and protocols

- Alarms raised by the access control system

- Activation and deactivation of protection systems, such as AV and IDS
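A structured (JSON) record makes the fields listed above easy to search and correlate across sources. The sketch below emits one such record per event with a UTC ISO 8601 timestamp; the field names and the `security_event` helper are hypothetical, not a schema the document prescribes.

```python
import json
from datetime import datetime, timezone

def security_event(user_id, action, outcome, source_ip, device=None, resource=None, **details):
    """Build a JSON log line carrying the key fields for a security event."""
    if outcome not in ("success", "failure"):
        raise ValueError("outcome must be 'success' or 'failure'")
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(timespec="seconds"),
        "user_id": user_id,
        "action": action,        # e.g. login, config_change, privilege_use
        "outcome": outcome,      # successful vs. rejected attempts
        "source_ip": source_ip,
        "device": device,        # device identity or location
        "resource": resource,    # file, system, or configuration item touched
        "details": details,      # any extra context supplied by the caller
    }
    return json.dumps(record, sort_keys=True)
```

A call such as `security_event("alice", "login", "failure", "192.0.2.10", device="vpn-gw-1", reason="bad password")` yields a single line that a SIEM can parse without custom patterns.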

6.3 Control of Operational Software

Establish and maintain documented procedures to manage the installation of software on operational systems. Only qualified, trained administrators should install operational system software. Updates to operational system software should use only approved and tested executable code. Ideally, a configuration control system and a rollback strategy should be in place before any update. Audit logs of updates, and previous versions of updated software, should be maintained.

Third parties that require access to perform software updates should be monitored, and their access removed once updates are installed and tested.

6.4 Technical Vulnerability Management

Technical vulnerabilities can introduce significant risks to higher-education institutions, directly leading to costly data leaks or data breaches. Although this is widely acknowledged, developing frameworks for detecting, evaluating, and rapidly addressing vulnerabilities is often a significant challenge. It is useful to approach this area through five critical success factors (below) that drive effective threat and vulnerability management.

Knowing What We Have (Asset Inventory): It is imperative to keep an up-to-date inventory of asset groups so that action can be taken once a technical vulnerability is reviewed and a mitigation strategy agreed on. These inventories also let us identify and prioritize “high risk systems,” where the impact of technical vulnerabilities can be greatest.
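The value of an up-to-date inventory shows the moment an advisory arrives: affected hosts can be listed immediately. The sketch below assumes a hypothetical inventory format and purely numeric dotted version strings; real package versions (epochs, suffixes such as `-rc1`, distribution backports) need a proper version parser.

```python
def parse_version(text):
    """Turn a dotted numeric version such as '2.4.57' into (2, 4, 57)."""
    return tuple(int(part) for part in text.split("."))

def affected_hosts(inventory, product, fixed_in):
    """List (host, version) pairs running `product` older than `fixed_in`.

    inventory: list of dicts like {"host": ..., "software": {name: version}}
    """
    fixed = parse_version(fixed_in)
    hits = []
    for asset in inventory:
        version = asset["software"].get(product)
        # Compare numerically: a plain string comparison would wrongly rank "2.4.9" above "2.4.57".
        if version is not None and parse_version(version) < fixed:
            hits.append((asset["host"], version))
    return sorted(hits)
```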

Establishing Clear Authority to Review Vulnerabilities: Because probing a network for vulnerabilities can disrupt systems and expose private data, higher education institutions need a policy in place and buy-in from the top before performing vulnerability assessments. Many organizations address this issue in their acceptable use policies, making consent to vulnerability scanning a condition of connecting to the network. Additionally, it is important to clarify that the main purpose of seeking vulnerabilities is to defend against outside attackers. (A public health metaphor may help people understand the need for scanning: we are looking for symptoms of illness.) There is also a need for policies and ethical guidelines for those who have access to data from vulnerability scans. These individuals need to understand the appropriate action when illegal materials are found on systems during a vulnerability scan. The appropriate action will vary between institutions (for example, public regulations in Georgia versus public regulations in California). Some organizations may want to write specifics into policy, whereas others leave policy more open to interpretation and address specific issues through procedures such as consulting legal counsel.

Vulnerability Awareness and Context: It is important that we keep up-to-date with industry notices about technical vulnerabilities and evaluate risk and mitigation strategies. Vulnerability notices are released on a daily basis and a plan needs to be in place for how to track, analyze, and prioritize our efforts.

Risk and Process Integration: Technical vulnerability review is an operational aspect of an overall information security risk management strategy. As such, vulnerabilities must be analyzed in the context of risks including those related to the potential for operational disruption. These risks must also have a clear reporting path that allows for appropriate awareness of risk factors and exposure. Lastly, vulnerability management should also integrate into change management and incident management processes to inform the review and execution of these areas.

System and Application Lifecycle Integration: The review of vulnerabilities also must be integrated in system release and software development planning to ensure that potential weaknesses are identified early to both lower risks and manage costs of finding these issues prior to identified release dates. (Three approaches to managing technical vulnerabilities in application software are described in the Application Security and Software Development Life Cycle presentation from the 2010 Security Professionals Conference.)

6.5 Technical Vulnerability Scanning

Depending on the size and structure of the institution, the approach to vulnerability scanning might differ. Small institutions that have a good understanding of IT resources throughout the enterprise might centralize vulnerability scanning. Larger institutions are more likely to have some degree of decentralization, so vulnerability scanning might be the responsibility of individual units. Some institutions might have a blend of both centralized and decentralized vulnerability assessment. Regardless, before starting a vulnerability scanning program, it is important to have authority to conduct the scans and to understand the targets that will be scanned.

Vulnerability scanning tools and methods are often somewhat tailored to varied types of information resources and vulnerability classes. The table below shows several important vulnerability classes and some relevant tools.

| Common Types of Technical Vulnerabilities | Relevant Assessment Tools |
|---|---|
| Application vulnerabilities | Web application scanners (static and dynamic), web application firewalls |
| Network layer vulnerabilities | Network vulnerability scanners, port scanners, traffic profilers |
| Host/system layer vulnerabilities | Authenticated vulnerability scans, asset and patch management tools, host assessment and scoring tools |

Common Challenges

"Scanning Can Cause Disruptions." IT operations teams are, quite reasonably, very sensitive about how vulnerability scans are conducted and keen to understand any potential for operational disruption. Legacy systems and older equipment can have issues even with simple network port scans. To help with this, it is often useful to build confidence in the scanning process by partnering with these teams to conduct risk evaluations before initiating or expanding a scanning program. It is also important to agree on the “scan windows” when assessments will occur so that they do not conflict with regular maintenance schedules.

"Drowning In Vulnerability Data and False Positives." Technical vulnerability management practices can produce very large data sets. A tool reporting that a vulnerability is present does not confirm it; follow-up evaluation is frequently needed to validate findings. Because reviewing every reported vulnerability is infeasible for many teams, it is very important to develop a vulnerability prioritization plan before initiating a large number of scans. These plans should be risk-driven so that teams spend their time on the most important vulnerabilities in terms of both likelihood of exploitation and impact.

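A risk-driven prioritization plan can be approximated by scoring each validated finding on likelihood and impact. The sketch below multiplies the CVSS base score by a hypothetical asset-criticality weight and boosts findings known to be exploited in the wild; the weights, the boost factor, and the field names are assumptions to be replaced by an organization's own risk model.

```python
from dataclasses import dataclass

# Hypothetical weights for the impact of compromising each asset tier.
CRITICALITY_WEIGHT = {"low": 0.5, "medium": 1.0, "high": 1.5, "critical": 2.0}

@dataclass
class Finding:
    host: str
    vuln_id: str
    cvss: float        # CVSS base score, 0.0-10.0
    asset_tier: str    # key into CRITICALITY_WEIGHT
    exploited: bool    # exploitation observed in the wild
    validated: bool    # confirmed by follow-up, not just reported by a scanner

def priority(f):
    score = f.cvss * CRITICALITY_WEIGHT[f.asset_tier]
    if f.exploited:
        score *= 1.5  # hypothetical boost for known exploitation
    return score

def triage(findings, top_n=10):
    """Return the highest-priority validated findings first."""
    confirmed = [f for f in findings if f.validated]
    return sorted(confirmed, key=priority, reverse=True)[:top_n]
```

Note how a 9.8 finding on a low-value kiosk can rank below a 6.5 finding on an important, actively targeted server once asset impact and exploitation are considered.
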
6.6 Information Systems Audit Considerations

It is important to ensure that all IT controls and information security audits are planned events rather than reactive 'on-the-spot' challenges. Most organizations undergo a series of audits each year, ranging from financial IT controls reviews to targeted assessments of critical systems. Audits that include testing activities can prove disruptive to campus users if any unforeseen outages occur as a result of testing or assessments.

Through working with campus leadership, it should be possible to determine when audits will occur and obtain relevant information in advance about the specific IT controls that will be examined or tested.

Develop an 'audit plan' for each audit that provides information relevant to each system and area to be assessed. These audit plans should take into consideration:

- Asset Inventory with contact information for system administrators/owners;

- Requirements for testing/maintenance windows;

- Information about backups (if applicable) in case systems later need to be restored due to unplanned outages;

- Checklists or other materials provided in advance by auditors, etc.

If applicable, work with IT and campus departments to provide audit preparation services to ensure that everyone understands their roles in the audit and how to respond to auditors' questions, issues and concerns. Protecting sensitive information during audits is critical, and documents provided to auditors should be recovered, if possible, shortly before audits are completed.

All audit activity that assesses an operational system should be managed to minimize any impact on the system during required hours of operation. Any testing of operational systems that could adversely affect them should be conducted during off hours.

7. Patch Management Process

Step 1: Discovery

First, we will ensure we have a comprehensive network inventory. At the most basic level, this includes understanding the types of devices, operating systems, OS versions, and third-party applications. Many breaches originate in neglected or forgotten systems that IT has lost track of. MSPs should be utilizing tools that enable them to scan their clients’ environments and get comprehensive snapshots of everything on the network.

Step 2: Categorization

Segment managed systems and/or users according to risk and priority. Examples could be by machine type (server, laptop, etc.), OS, OS version, user role, etc. This will allow you to create more granular patching policies instead of taking a one-policy-fits-all approach.

Step 3: Patch management policy creation

Create patching criteria by establishing what will be patched, when, and under what conditions. For example, you may want some systems or users to be patched more frequently and automatically than others (the patching schedule for laptop end users may be weekly, while patching for servers may be less frequent and more manual). You may also want to treat different types of patches differently, with some having a quicker or more extensive rollout process (for example, browser updates vs. OS updates, or critical vs. non-critical updates). Finally, you’ll want to identify maintenance windows to avoid disruption (taking into account time zones for “follow the sun” patching, etc.) and define exceptions.
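The Step 3 criteria (what gets patched, how quickly, and with which exceptions) can be captured as data. The device classes, severity labels, day counts, and exempt-host mechanism below are hypothetical examples chosen to mirror the laptop-versus-server contrast in the text, not recommended SLAs.

```python
from datetime import timedelta

# Hypothetical maximum days from patch release to installation.
PATCH_SLA_DAYS = {
    ("laptop", "critical"): 3,
    ("laptop", "non-critical"): 7,
    ("server", "critical"): 7,
    ("server", "non-critical"): 30,
}

def patch_due_date(host, device_class, severity, released, exempt_hosts=frozenset()):
    """Return the date a patch must be installed, or None for an approved exception."""
    if host in exempt_hosts:
        return None  # documented exception, revisited during Step 10 reviews
    return released + timedelta(days=PATCH_SLA_DAYS[(device_class, severity)])
```

An unknown (device class, severity) pair raises `KeyError`, which surfaces gaps in the policy instead of silently applying a default.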

Step 4: Monitor for new patches and vulnerabilities

Understand vendor patch release schedules and models, and identify reliable sources for timely vulnerability disclosures. Create a process for evaluating emergency patches.

Step 5: Patch testing

Create a testing environment, or at the very least a testing segment, to avoid being caught off guard by unintended issues. This should include creating backups for easy rollback if necessary. Validate successful deployment and monitor for incompatibility or performance issues.

Step 6: Configuration management

Document any changes about to be made via patching. This will come in handy should you run into any issues with patch deployment beyond the initial test segment or environment.
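A change log can be as simple as an append-only file of structured records. The minimal sketch below assumes a JSON Lines file and a hypothetical `ChangeRecord` schema; the field names are illustrative only and would follow whatever the team's change management process requires.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class ChangeRecord:
    host: str
    patch_id: str
    package: str
    version_before: str
    version_after: str
    planned_at: str  # ISO 8601 timestamp, including the UTC offset


def record_change(log_path: str, record: ChangeRecord) -> None:
    """Append one change record as a JSON line so the history can be searched later."""
    with open(log_path, "a", encoding="utf-8") as log:
        log.write(json.dumps(asdict(record)) + "\n")


def changes_for_host(log_path: str, host: str) -> list[ChangeRecord]:
    """Return every recorded change for one host, oldest first."""
    records = []
    with open(log_path, encoding="utf-8") as log:
        for line in log:
            data = json.loads(line)
            if data["host"] == host:
                records.append(ChangeRecord(**data))
    return records
```

When a deployment misbehaves outside the test segment, `changes_for_host` gives the previous versions needed to plan a rollback on that host.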

Step 7: Patch rollout

Roll out patches according to the patch management policies created in Step 3.
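A staged, ring-based rollout is one common way to apply such policies while limiting the impact of a bad patch. In the minimal sketch below, ring 0 is the test segment from Step 5; `install` and `rollback` are hypothetical hooks standing in for whatever deployment tool is in use, and `max_failure_rate` is an assumed tolerance.

```python
from typing import Callable


def staged_rollout(
    rings: list[list[str]],
    install: Callable[[str], bool],
    rollback: Callable[[str], None],
    max_failure_rate: float = 0.0,
) -> dict:
    """Patch ring by ring, starting with the test segment.

    If a ring's failure rate exceeds max_failure_rate, the hosts patched in that
    ring are rolled back and the rollout halts before touching later rings.
    """
    patched: list[str] = []
    failed_all: list[str] = []
    for index, ring in enumerate(rings):
        results = {host: install(host) for host in ring}
        failed = [host for host, ok in results.items() if not ok]
        succeeded = [host for host, ok in results.items() if ok]
        if ring and len(failed) / len(ring) > max_failure_rate:
            for host in succeeded:
                rollback(host)
            return {"halted_at_ring": index, "patched": patched, "failed": failed_all + failed}
        patched.extend(succeeded)
        failed_all.extend(failed)
    return {"halted_at_ring": None, "patched": patched, "failed": failed_all}


rolled_back: list[str] = []
result = staged_rollout(
    [["test-01"], ["web-01", "web-02"]],   # ring 0 is the test segment
    install=lambda host: host != "web-02",  # simulate one failed install
    rollback=rolled_back.append,
)
print(result)       # {'halted_at_ring': 1, 'patched': ['test-01'], 'failed': ['web-02']}
print(rolled_back)  # ['web-01']
```

Only the failing ring is rolled back here; earlier rings already passed, and whether to revert them as well is a policy decision.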

Step 8: Patch auditing

Conduct a patch management audit to identify any failed or pending patches, and continue monitoring for any unexpected incompatibility or performance issues. It’s also a good idea to enlist specific end users who can serve as additional eyes and ears.
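The core of a patch audit is comparing what should be installed with what is installed. The sketch below assumes three hypothetical inputs per host (required patch IDs, installed patch IDs, and patch IDs whose installation was attempted and failed) and separates failed patches from pending ones, since they usually call for different follow-up.

```python
def audit_patches(
    required: dict[str, set[str]],
    installed: dict[str, set[str]],
    failed_attempts: dict[str, set[str]],
) -> dict[str, dict[str, set[str]]]:
    """Per host, split missing patches into 'failed' (attempted and failed)
    and 'pending' (not yet attempted). Fully patched hosts are omitted."""
    findings: dict[str, dict[str, set[str]]] = {}
    for host, needed in required.items():
        missing = needed - installed.get(host, set())
        failed = missing & failed_attempts.get(host, set())
        if missing:
            findings[host] = {"failed": failed, "pending": missing - failed}
    return findings


# Hypothetical patch IDs and hosts.
required = {"web-01": {"PATCH-101", "PATCH-102"}, "db-01": {"PATCH-101"}, "hr-laptop-3": {"PATCH-103"}}
installed = {"web-01": {"PATCH-101"}, "db-01": {"PATCH-101"}}
failed_attempts = {"web-01": {"PATCH-102"}}
print(audit_patches(required, installed, failed_attempts))
```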

Step 9: Reporting

Produce a patch compliance report that we can share with our clients to give them visibility into our work.
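A compliance report typically reduces audit data to a few headline figures. This hypothetical `compliance_summary` helper assumes per-host sets of required and installed patch IDs and reports the share of required patches installed and which hosts are fully compliant; the exact metrics a client report should contain remain a policy choice.

```python
def compliance_summary(required: dict[str, set[str]], installed: dict[str, set[str]]) -> dict:
    """Summarize how many required patches are installed, overall and per host."""
    total = sum(len(patches) for patches in required.values())
    applied = sum(len(patches & installed.get(host, set())) for host, patches in required.items())
    compliant = sorted(
        host for host, patches in required.items() if patches <= installed.get(host, set())
    )
    return {
        "hosts": len(required),
        "compliant_hosts": compliant,
        # Share of all required patches that are installed, as a percentage.
        "patch_compliance_pct": round(100 * applied / total, 1) if total else 100.0,
    }


# Hypothetical data.
required = {"web-01": {"PATCH-101", "PATCH-102"}, "db-01": {"PATCH-101"}}
installed = {"web-01": {"PATCH-101", "PATCH-102"}}
summary = compliance_summary(required, installed)
print(f"{summary['patch_compliance_pct']}% of required patches installed; "
      f"{len(summary['compliant_hosts'])} of {summary['hosts']} hosts fully compliant")
```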

Step 10: Review, improve, and repeat

Establish a cadence for repeating and optimizing Steps 1-9. This should include phasing out or isolating any outdated or unsupported machines, reviewing our policies, and revisiting exceptions to verify whether they still apply or are necessary.

8. Process of Handling an Incident

The incident response process has several phases. The initial phase involves establishing and training an incident response team and acquiring the necessary tools and resources. During preparation, the organization also attempts to limit the number of incidents that will occur by selecting and implementing a set of controls based on the results of risk assessments. However, residual risk will inevitably persist after controls are implemented, so detection of security breaches is necessary to alert the organization whenever incidents occur. Depending on the severity of the incident, the organization can mitigate its impact by containing it and ultimately recovering from it. During this phase, activity often cycles back to detection and analysis, for example to check whether additional hosts have been infected by malware while a malware incident is being eradicated. After the incident is adequately handled, the organization issues a report that details the cause and cost of the incident and the steps the organization should take to prevent future incidents. This section describes the major phases of the incident response process (preparation, detection and analysis, containment, eradication and recovery, and post-incident activity) in detail.
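The phase flow described above, including the loop from containment back to detection and analysis, can be modeled as a small state machine. This is a sketch, not a prescribed tool: the `Phase` names follow the phases listed in this section, an incident record is assumed to open at detection and analysis, and the transition from post-incident activity back to preparation is an assumption (lessons learned feeding future preparation).

```python
from enum import Enum


class Phase(Enum):
    PREPARATION = "preparation"
    DETECTION_ANALYSIS = "detection and analysis"
    CONTAINMENT_RECOVERY = "containment, eradication and recovery"
    POST_INCIDENT = "post-incident activity"


# Allowed moves between phases.
ALLOWED: dict[Phase, set[Phase]] = {
    Phase.PREPARATION: {Phase.DETECTION_ANALYSIS},
    Phase.DETECTION_ANALYSIS: {Phase.CONTAINMENT_RECOVERY},
    # Containment can cycle back, e.g. to check for more infected hosts.
    Phase.CONTAINMENT_RECOVERY: {Phase.DETECTION_ANALYSIS, Phase.POST_INCIDENT},
    # Assumption: lessons learned feed the next round of preparation.
    Phase.POST_INCIDENT: {Phase.PREPARATION},
}


class Incident:
    def __init__(self, incident_id: str) -> None:
        self.incident_id = incident_id
        self.phase = Phase.DETECTION_ANALYSIS  # assumed: a record opens at detection
        self.history: list[Phase] = [self.phase]

    def advance(self, new_phase: Phase) -> None:
        """Move to new_phase, rejecting transitions the process does not allow."""
        if new_phase not in ALLOWED[self.phase]:
            raise ValueError(f"cannot move from {self.phase.value} to {new_phase.value}")
        self.phase = new_phase
        self.history.append(new_phase)


incident = Incident("INC-0001")  # hypothetical ID format
incident.advance(Phase.CONTAINMENT_RECOVERY)
incident.advance(Phase.DETECTION_ANALYSIS)  # another infected host found
incident.advance(Phase.CONTAINMENT_RECOVERY)
incident.advance(Phase.POST_INCIDENT)
print([p.value for p in incident.history])
```

Recording the history, rather than only the current phase, preserves how many times the incident cycled between containment and detection, which is useful input for the post-incident report.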

8.1 Preparation

Incident response methodologies typically emphasize preparation: not only establishing an incident response capability so that the organization is ready to respond to incidents, but also preventing incidents by ensuring that systems, networks, and applications are sufficiently secure. Although the incident response team is not typically responsible for incident prevention, prevention is fundamental to the success of incident response programs. This section provides basic advice on preparing to handle incidents and on preventing incidents.

8.1.1 Preparing to Handle Incidents

The lists below provide examples of tools and resources that may be of value during incident handling. These lists are intended to be a starting point for discussions about which tools and resources an organization’s incident handlers need. For example, smartphones are one way to have resilient emergency communication and coordination mechanisms. An organization should have multiple (separate and different) communication and coordination mechanisms in case one mechanism fails.

8.1.1.1 Incident Handler Communications and Facilities

Contact information for team members and others within and outside the organization (primary and backup contacts), such as law enforcement and other incident response teams; information may include phone numbers, email addresses, public encryption keys (in accordance with the encryption software described below), and instructions for verifying the contact’s identity

On-call information for other teams within the organization, including escalation information

Incident reporting mechanisms, such as phone numbers, email addresses, online forms, and secure instant messaging systems that users can use to report suspected incidents; at least one mechanism should permit people to report incidents anonymously

Issue tracking system for tracking incident information, status, etc.

Smartphones to be carried by team members for off-hour support and onsite communications

Encryption software to be used for communications among team members, within the organization and with external parties; for Federal agencies, software must use a FIPS-validated encryption algorithm

War room for central communication and coordination; if a permanent war room is not necessary or practical, the team should create a procedure for procuring a temporary war room when needed

Secure storage facility for securing evidence and other sensitive materials

8.1.1.2 Incident Analysis Hardware and Software

Digital forensic workstations and/or backup devices to create disk images, preserve log files, and save other relevant incident data

Laptops for activities such as analyzing data, sniffing packets, and writing reports

Spare workstations, servers, and networking equipment, or the virtualized equivalents, which may be used for many purposes, such as restoring backups and trying out malware

Portable printer to print copies of log files and other evidence from non-networked systems

Packet sniffers and protocol analyzers to capture and analyze network traffic

Digital forensic software to analyze disk images

Removable media with trusted versions of programs to be used to gather evidence from systems

Evidence gathering accessories, including hard-bound notebooks, digital cameras, audio recorders, chain of custody forms, evidence storage bags and tags, and evidence tape, to preserve evidence for possible legal actions

8.1.1.3 Incident Analysis Resources

Port lists, including commonly used ports and Trojan horse ports

Documentation for OSs, applications, protocols, and intrusion detection and antivirus products

Network diagrams and lists of critical assets, such as database servers

Current baselines of expected network, system, and application activity

Cryptographic hashes of critical files to speed incident analysis, verification, and eradication
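The cryptographic hashes of critical files listed above can be produced and checked with the Python standard library. The minimal sketch below uses SHA-256 via `hashlib`; which files to include and where the baseline is stored are assumptions left to the team, and the helper names are hypothetical. The baseline itself should be kept somewhere an attacker cannot modify, such as the trusted removable media in the jump kit.

```python
import hashlib
from pathlib import Path


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_baseline(paths: list[Path]) -> dict[str, str]:
    """Record the known-good hash of each critical file."""
    return {str(path): sha256_file(path) for path in paths}


def changed_files(baseline: dict[str, str]) -> list[str]:
    """Return baseline entries that are now missing or whose contents differ."""
    changed = []
    for name, expected in baseline.items():
        path = Path(name)
        if not path.exists() or sha256_file(path) != expected:
            changed.append(name)
    return changed
```

During an incident, running `changed_files` against a baseline taken before the compromise quickly narrows down which binaries and configuration files were altered, which speeds both analysis and verification after eradication.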

8.1.1.4 Incident Mitigation Software

Access to images of clean OS and application installations for restoration and recovery purposes

Many incident response teams create a jump kit, which is a portable case that contains materials that may be needed during an investigation. The jump kit should be ready to go at all times. Jump kits contain many of the same items listed above. For example, each jump kit typically includes a laptop loaded with appropriate software (e.g., packet sniffers, digital forensics tools). Other important materials include backup devices, blank media, and basic networking equipment and cables. Because the purpose of a jump kit is to facilitate faster responses, the team should avoid borrowing items from it.

Each incident handler should have access to at least two computing devices (e.g., laptops). One, such as the one from the jump kit, should be used to perform packet sniffing, malware analysis, and all other actions that risk contaminating the device that performs them. This laptop should be scrubbed and all software reinstalled before it is used for another incident. Because this laptop is special purpose, it is likely to use software other than the standard enterprise tools and configurations, and whenever possible the incident handlers should be allowed to specify basic technical requirements for these special-purpose investigative laptops. In addition to an investigative laptop, each incident handler should also have a standard laptop, smartphone, or other computing device for writing reports, reading email, and performing other duties unrelated to hands-on incident analysis.

Exercises involving simulated incidents can also be very useful for preparing staff for incident handling; see NIST SP 800-84 for more information on exercises and Appendix A for sample exercise scenarios.

8.1.2 Preventing Incidents

Keeping the number of incidents reasonably low is very important to protect the business processes of the organization. If security controls are insufficient, higher volumes of incidents may occur, overwhelming the incident response team. This can lead to slow and incomplete responses, which translate to a larger negative business impact (e.g., more extensive damage, longer periods of service and data unavailability).

It is outside the scope of this document to provide specific advice on securing networks, systems, and applications. Although incident response teams are generally not responsible for securing resources, they can be advocates of sound security practices. An incident response team may be able to identify problems that the organization is otherwise not aware of; the team can play a key role in risk assessment and training by identifying gaps. Other documents already provide advice on general security concepts and on operating system and application-specific guidelines. The following text, however, provides a brief overview of some of the main recommended practices for securing networks, systems, and applications:

Risk Assessments. Periodic risk assessments of systems and applications should determine what risks are posed by combinations of threats and vulnerabilities. This should include understanding the applicable threats, including organization-specific threats. Each risk should be prioritized, and the risks can be mitigated, transferred, or accepted until a reasonable overall level of risk is reached. Another benefit of conducting risk assessments regularly is that critical resources are identified, allowing staff to emphasize monitoring and response activities for those resources.

Host Security. All hosts should be hardened appropriately using standard configurations. In addition to keeping each host properly patched, hosts should be configured to follow the principle of least privilege, granting users only the privileges necessary for performing their authorized tasks. Hosts should have auditing enabled and should log significant security-related events. The security of hosts and their configurations should be continuously monitored. Many organizations use operating system and application configuration checklists expressed in the Security Content Automation Protocol (SCAP) to help secure hosts consistently and effectively.

Network Security. The network perimeter should be configured to deny all activity that is not expressly permitted. This includes securing all connection points, such as virtual private networks (VPNs) and dedicated connections to other organizations.

Malware Prevention. Software to detect and stop malware should be deployed throughout the organization. Malware protection should be deployed at the host level (e.g., server and workstation operating systems), the application server level (e.g., email server, web proxies), and the application client level (e.g., email clients, instant messaging clients).

User Awareness and Training. Users should be made aware of policies and procedures regarding appropriate use of networks, systems, and applications. Applicable lessons learned from previous incidents should also be shared with users so they can see how their actions could affect the organization. Improving user awareness regarding incidents should reduce the frequency of incidents. IT staff should be trained so that they can maintain their networks, systems, and applications in accordance with the organization’s security standards.
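The default-deny principle from the Network Security practice above can be illustrated with a tiny rule evaluator. This is a conceptual sketch only, not a firewall: the `AllowRule` type and `is_permitted` function are hypothetical, and real perimeter devices also consider direction, connection state, and destination. The key property is that traffic passes only when some rule explicitly allows it; anything unmatched is denied.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class AllowRule:
    source: str    # CIDR block, e.g. "198.51.100.0/24"
    port: int
    protocol: str  # "tcp" or "udp"


def is_permitted(rules: list[AllowRule], src_ip: str, port: int, protocol: str) -> bool:
    """Default deny: return True only if an allow rule explicitly matches."""
    address = ipaddress.ip_address(src_ip)
    for rule in rules:
        if (address in ipaddress.ip_network(rule.source)
                and rule.port == port
                and rule.protocol == protocol):
            return True
    return False  # nothing matched, so the traffic is denied


# Hypothetical rules using documentation address ranges.
rules = [
    AllowRule("0.0.0.0/0", 443, "tcp"),        # HTTPS from anywhere
    AllowRule("198.51.100.0/24", 22, "tcp"),   # SSH only from an admin network
]
print(is_permitted(rules, "203.0.113.9", 443, "tcp"))  # True
print(is_permitted(rules, "203.0.113.9", 22, "tcp"))   # False
```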

8.2 Detection and Analysis

8.2.1 Attack Vectors

Incidents can occur in countless ways, so it is infeasible to develop step-by-step instructions for handling every incident. Organizations should be generally prepared to handle any incident but should focus on being prepared to handle incidents that use common attack vectors. Different types of incidents merit different response strategies. The attack vectors listed below are not intended to provide a definitive classification of incidents; rather, they simply list common methods of attack, which can be used as a basis for defining more specific handling procedures.

External/Removable Media: An attack executed from removable media or a peripheral device—for example, malicious code spreading onto a system from an infected USB flash drive.

Attrition: An attack that employs brute force methods to compromise, degrade, or destroy systems, networks, or services (e.g., a DDoS attack intended to impair or deny access to a service or application, or a brute force attack against an authentication mechanism such as passwords, CAPTCHAs, or digital signatures).

Web: An attack executed from a website or web-based application, for example a cross-site scripting attack used to steal credentials, or a redirect to a site that exploits a browser vulnerability and installs malware.

Email: An attack executed via an email message or attachment—for example, exploit code disguised as an attached document or a link to a malicious website in the body of an email message.
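For the attrition vector, a simple detection heuristic for brute force attacks against passwords is to count failed logins per source within a sliding time window. The sketch below assumes authentication events have already been parsed into `(timestamp_seconds, source_ip, succeeded)` tuples sorted by time; the function name, threshold, and window are hypothetical defaults, and the approach does not address DDoS, which needs traffic-level monitoring.

```python
from collections import defaultdict, deque
from typing import Iterable


def detect_brute_force(
    events: Iterable[tuple[float, str, bool]],
    threshold: int = 5,
    window_seconds: float = 60.0,
) -> set[str]:
    """Flag sources with at least `threshold` failed logins whose timestamps
    all fall within a span shorter than `window_seconds`."""
    recent: dict[str, deque] = defaultdict(deque)
    flagged: set[str] = set()
    for timestamp, source, succeeded in events:
        if succeeded:
            continue  # only failed attempts count toward the threshold
        window = recent[source]
        window.append(timestamp)
        # Drop failures that have slid out of the window.
        while window and timestamp - window[0] >= window_seconds:
            window.popleft()
        if len(window) >= threshold:
            flagged.add(source)
    return flagged


# Hypothetical events: one fast burst, one slow trickle, one successful login.
events = sorted(
    [(t, "198.51.100.7", False) for t in range(0, 50, 10)]
    + [(t, "203.0.113.4", False) for t in range(0, 150, 30)]
    + [(5, "192.0.2.1", True)]
)
print(detect_brute_force(events))  # {'198.51.100.7'}
```

A slow trickle like the second source evades a fixed window, so in practice such a rule would complement, not replace, account lockout and longer-horizon analysis.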